Mistral AI Introduces Codestral Embed: A High-Performance Code Embedding Model for Scalable Retrieval and Semantic Understanding

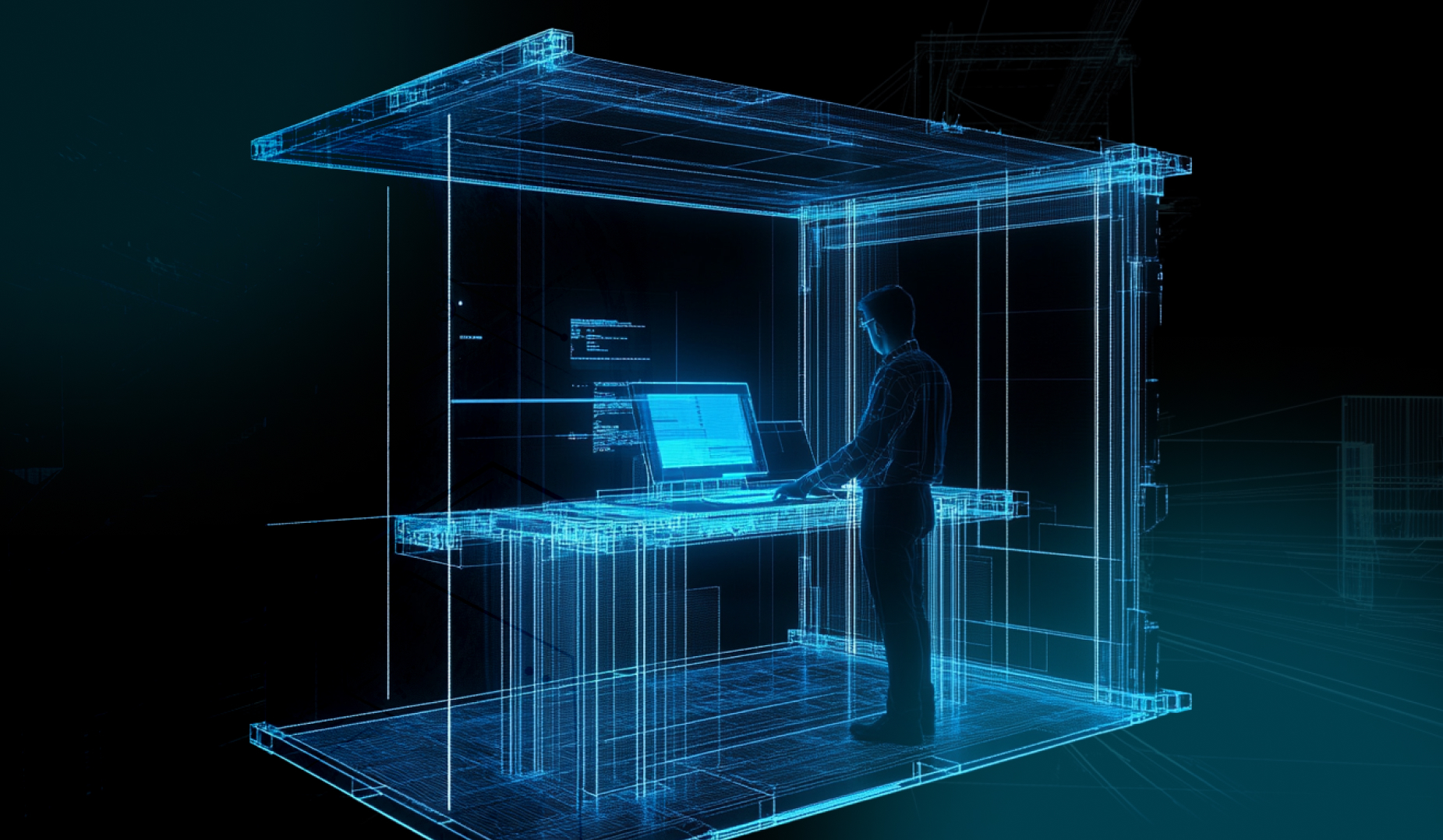

Modern software engineering faces growing challenges in accurately retrieving and understanding code across diverse programming languages and large-scale codebases. Existing embedding models often struggle to capture the deep semantics of code, resulting in poor performance in tasks such as code search, retrieval-augmented generation (RAG), and semantic analysis. These limitations hinder developers’ ability to efficiently locate relevant code snippets, reuse components, and manage large projects. As software systems grow more complex, there is a pressing need for more effective, language-agnostic representations of code that can power reliable retrieval and reasoning across a wide range of development tasks.

Mistral AI has introduced Codestral Embed, a specialized embedding model built specifically for code-related tasks. Designed to handle real-world code more effectively than existing solutions, it enables powerful retrieval capabilities across large codebases. What sets it apart is its flexibility: users can adjust embedding dimensions and precision levels to balance retrieval quality against storage cost. Even at lower dimensions, such as 256 with int8 precision, Codestral Embed reportedly surpasses top models from competitors like OpenAI, Cohere, and Voyage, offering high retrieval quality at a reduced storage cost.
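To make the storage trade-off concrete, here is a minimal Python sketch of shrinking an embedding to 256 dimensions and int8 precision. It assumes that keeping the leading components of a vector is a valid way to reduce its dimension, which the article does not confirm. The 1,024-dimension starting size, the helper names, and the toy vector are all hypothetical; in practice the vectors would come from the embedding API.

```python
import math

FULL_DIMS = 1024     # assumed full embedding size, not stated in the article
REDUCED_DIMS = 256   # the lower dimension mentioned in the article


def truncate_and_normalize(vec, dims):
    """Keep the first `dims` components and rescale the result to unit length."""
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return list(head)
    return [x / norm for x in head]


def quantize_int8(vec):
    """Map floats in [-1, 1] to integers in [-127, 127]."""
    return [max(-127, min(127, round(x * 127))) for x in vec]


def storage_bytes(num_vectors, dims, bytes_per_value):
    """Raw storage needed for a dense index, ignoring any index overhead."""
    return num_vectors * dims * bytes_per_value


# Toy stand-in for a real embedding.
full = [math.sin(i + 1) for i in range(FULL_DIMS)]

reduced = truncate_and_normalize(full, REDUCED_DIMS)
quantized = quantize_int8(reduced)

# Compare one million float32 vectors with one million reduced int8 vectors.
full_size_bytes = storage_bytes(1_000_000, FULL_DIMS, 4)
reduced_size_bytes = storage_bytes(1_000_000, REDUCED_DIMS, 1)
print(full_size_bytes, reduced_size_bytes)
```

Under these assumptions, one million vectors shrink from about 4.1 GB (1,024 float32 values each) to 256 MB (256 int8 values each), a 16x reduction. Whether retrieval quality holds up at that size is exactly what the reported benchmarks are meant to show.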

Beyond basic retrieval, Codestral Embed supports a wide range of developer-focused applications. These include code completion, explanation, editing, semantic search, and duplicate detection. The model can also help organize and analyze repositories by clustering code based on functionality or structure, eliminating the need for manual supervision. This makes it particularly useful for tasks like understanding architectural patterns, categorizing code, or supporting automated documentation, ultimately helping developers work more efficiently with large and complex codebases.
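As a rough illustration of unsupervised grouping, the sketch below clusters snippets by embedding similarity. It uses a simple greedy threshold scheme chosen for brevity, not a method the article attributes to Mistral. The two-dimensional vectors, snippet names, and the 0.8 threshold are hypothetical stand-ins for real embeddings and a tuned cutoff.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def greedy_cluster(vectors, threshold=0.8):
    """Put each item in the first cluster whose seed vector is similar enough.

    No labels are needed: a new cluster is opened whenever an item
    matches none of the existing seeds.
    """
    clusters = []  # list of (seed_vector, member_names)
    for name, vec in vectors.items():
        for seed, members in clusters:
            if cosine(seed, vec) >= threshold:
                members.append(name)
                break
        else:
            clusters.append((vec, [name]))
    return [members for _, members in clusters]


# Hypothetical embeddings: two I/O helpers and two UI helpers.
SNIPPETS = {
    "read_csv": [1.0, 0.1],
    "read_parquet": [0.9, 0.2],
    "render_button": [0.1, 1.0],
    "render_modal": [0.2, 0.9],
}

groups = greedy_cluster(SNIPPETS)
print(groups)
```

A production setup would more likely hand the vectors to an established clustering library, but the principle is the same: snippets with similar embeddings end up in the same group without anyone labeling them first.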

Codestral Embed is tailored for understanding and retrieving code efficiently, especially in large-scale development environments. It powers retrieval-augmented generation by quickly fetching relevant context for tasks like code completion, editing, and explanation, which makes it a natural fit for coding assistants and agent-based tools. Developers can also run semantic code searches, using either natural language or code as the query, to find relevant snippets. Its ability to detect similar or duplicated code helps with reuse, policy enforcement, and cleaning up redundancy. Additionally, it can cluster code by functionality or structure, making it useful for repository analysis, spotting architectural patterns, and enhancing documentation workflows.
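Semantic search and duplicate detection both reduce to comparing embedding vectors. The following sketch ranks indexed snippets against a query by cosine similarity and flags pairs above a similarity threshold. The three-dimensional vectors, snippet names, and the 0.95 threshold are hypothetical; real vectors would be produced by the embedding model for both the query and the code.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vec, index, top_k=2):
    """Return the `top_k` snippet names most similar to the query, best first."""
    scored = [(cosine(query_vec, vec), name) for name, vec in index.items()]
    scored.sort(reverse=True)
    return [(name, score) for score, name in scored[:top_k]]


def near_duplicates(index, threshold=0.95):
    """Return every pair of snippets whose similarity meets the threshold."""
    names = sorted(index)
    pairs = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if cosine(index[first], index[second]) >= threshold:
                pairs.append((first, second))
    return pairs


# Hypothetical embeddings for three indexed snippets.
INDEX = {
    "parse_json": [0.9, 0.1, 0.0],
    "load_json": [0.88, 0.15, 0.02],
    "send_email": [0.0, 0.2, 0.95],
}

# Hypothetical embedding of a query such as "read a JSON file".
QUERY = [0.85, 0.2, 0.05]

hits = search(QUERY, INDEX)
duplicates = near_duplicates(INDEX)
print(hits)
print(duplicates)
```

The pairwise loop is quadratic in the number of snippets, so a large codebase would normally rely on a vector database or an approximate nearest-neighbor index instead. The same `search` results are what a RAG pipeline would pass to the language model as context.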

According to the reported benchmark results, Codestral Embed surpasses existing models, such as OpenAI’s and Cohere’s, on benchmarks like SWE-Bench Lite and CodeSearchNet. The model offers customizable embedding dimensions and precision levels, allowing users to balance retrieval quality against storage needs. Key applications include retrieval-augmented generation, semantic code search, duplicate detection, and code clustering. Codestral Embed is available via API at $0.15 per million tokens, with a 50% discount for batch processing, and supports various output formats and dimensions to suit different development workflows.
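For a sense of scale, the quoted pricing can be turned into a quick estimate. The sketch below uses the $0.15 per million tokens rate and the 50% batch discount from the article; the 200-million-token repository size is a hypothetical figure, and actual token counts depend on the tokenizer.

```python
PRICE_PER_MILLION_TOKENS_USD = 0.15  # rate quoted in the article
BATCH_DISCOUNT = 0.50                # batch discount quoted in the article


def embedding_cost_usd(total_tokens, batch=False):
    """Estimate the cost in USD of embedding `total_tokens` tokens."""
    cost = total_tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS_USD
    if batch:
        cost *= 1 - BATCH_DISCOUNT
    return cost


REPO_TOKENS = 200_000_000  # hypothetical size of a codebase, in tokens

standard_cost = embedding_cost_usd(REPO_TOKENS)
batch_cost = embedding_cost_usd(REPO_TOKENS, batch=True)
print(f"standard: ${standard_cost:.2f}, batch: ${batch_cost:.2f}")
```

At these rates, embedding 200 million tokens would cost roughly $30 through the standard API and roughly $15 through batch processing.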

In conclusion, Codestral Embed offers customizable embedding dimensions and precision levels, enabling developers to strike a balance between retrieval quality and storage efficiency. Benchmark evaluations indicate that it surpasses existing models like OpenAI’s and Cohere’s in various code-related tasks, including retrieval-augmented generation and semantic code search. Its applications range from identifying duplicate code segments to semantic clustering for code analytics. Available through Mistral’s API, Codestral Embed provides a flexible and efficient option for developers seeking advanced code understanding capabilities.

The post Mistral AI Introduces Codestral Embed: A High-Performance Code Embedding Model for Scalable Retrieval and Semantic Understanding appeared first on MarkTechPost.