Voxel51’s New Auto-Labeling Tech Promises to Slash Annotation Costs by 100,000x

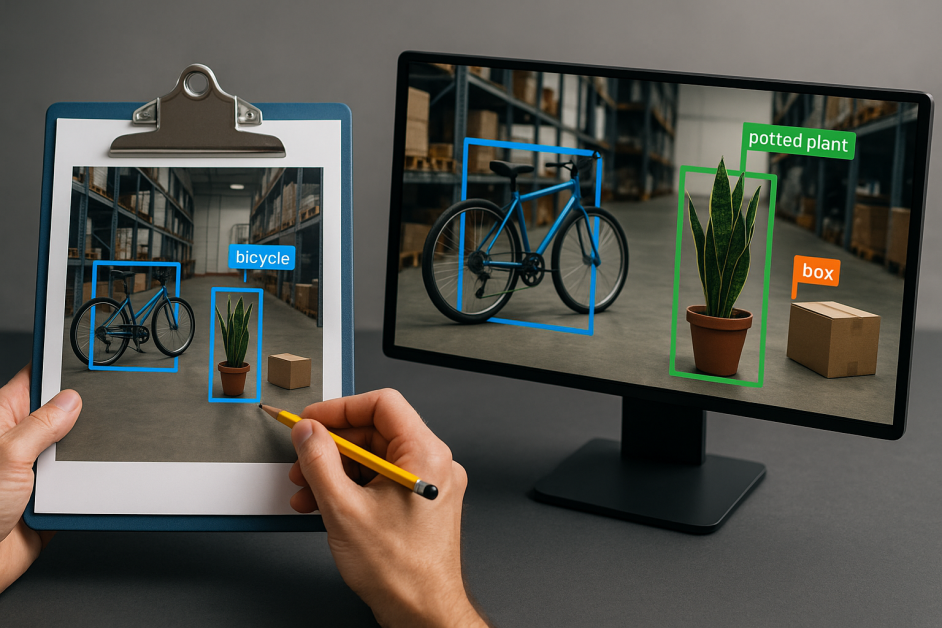

A groundbreaking new study from computer vision startup Voxel51 suggests that the traditional data annotation model is about to be upended. In research released today, the company reports that its new auto-labeling system achieves up to 95% of human-level accuracy while being 5,000x faster and up to 100,000x cheaper than manual labeling.

The study benchmarked foundation models such as YOLO-World and Grounding DINO on well-known datasets including COCO, LVIS, BDD100K, and VOC. Remarkably, in many real-world scenarios, models trained exclusively on AI-generated labels performed on par with—or even better than—those trained on human labels. For companies building computer vision systems, the implications are enormous: millions of dollars in annotation costs could be saved, and model development cycles could shrink from weeks to hours.

The New Era of Annotation: From Manual Labor to Model-Led Pipelines

For decades, data annotation has been a painful bottleneck in AI development. From ImageNet to autonomous vehicle datasets, teams have relied on vast armies of human workers to draw bounding boxes and segment objects—an effort both costly and slow.

The prevailing logic was simple: more human-labeled data = better AI. But Voxel51’s research flips that assumption on its head.

Their approach leverages pre-trained foundation models—some with zero-shot capabilities—and integrates them into a pipeline that automates routine labeling while using active learning to flag uncertain or complex cases for human review. This method dramatically reduces both time and cost.
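To make the routing idea concrete, here is a minimal sketch of how such a pipeline might separate routine labels from cases that need a human. The article does not say how Voxel51 measures uncertainty, so the confidence threshold used here is an assumption, and the `Detection` class, the `route_detections` function, and the sample values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    image_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route_detections(detections, threshold=0.5):
    """Split detections into auto-accepted labels and a human-review queue.

    Assumption: a low confidence score stands in for "uncertain or complex".
    The threshold value is illustrative, not taken from the study.
    """
    accepted, needs_review = [], []
    for det in detections:
        if det.confidence >= threshold:
            accepted.append(det)
        else:
            needs_review.append(det)
    return accepted, needs_review


# Made-up detections, as a zero-shot detector might emit them.
detections = [
    Detection("img_001", "car", 0.92),
    Detection("img_001", "traffic light", 0.31),
    Detection("img_002", "person", 0.77),
]

accepted, needs_review = route_detections(detections, threshold=0.5)
print(f"auto-labeled: {len(accepted)}, sent to human review: {len(needs_review)}")
```

Raising the threshold sends more cases to human reviewers and lowering it trusts the model more, so in this sketch the threshold is the knob that trades annotation cost against label quality.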

In one test, labeling 3.4 million objects on an NVIDIA L40S GPU took just over an hour and cost $1.18. Labeling the same objects manually through AWS SageMaker would have taken nearly 7,000 hours and cost over $124,000. In particularly challenging cases, such as identifying rare categories in the COCO or LVIS datasets, models trained on auto-generated labels occasionally outperformed their counterparts trained on human labels. This surprising result may stem from the foundation models' consistent labeling patterns and their training on large-scale internet data.
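The headline ratio can be checked against the figures from this one test. The short calculation below uses only the numbers quoted above. Because the article gives them as rounded bounds ("just over an hour", "nearly 7,000 hours", "over $124,000"), the results are rough estimates, and the one-hour GPU runtime is an assumption.

```python
# Figures quoted in the article for a single test (rounded in the source).
num_objects = 3_400_000
auto_cost_usd = 1.18
manual_cost_usd = 124_000   # "over $124,000", so treated as a lower bound
manual_hours = 7_000        # "nearly 7,000 hours"
auto_hours = 1.0            # assumption: "just over an hour" rounded down

# Cost ratio: roughly 105,000x, in line with the "up to 100,000x" claim.
cost_ratio = manual_cost_usd / auto_cost_usd

# Per-object cost: a fraction of a cent automated vs. a few cents manual.
auto_cost_per_object = auto_cost_usd / num_objects
manual_cost_per_object = manual_cost_usd / num_objects

# Speed ratio: the actual runtime was above one hour, so this is an
# upper estimate rather than the measured speedup.
speed_ratio_upper = manual_hours / auto_hours

print(f"cost ratio: about {cost_ratio:,.0f}x")
print(f"manual cost per object: ${manual_cost_per_object:.4f}")
print(f"automated cost per object: ${auto_cost_per_object:.8f}")
print(f"speed ratio (upper estimate): {speed_ratio_upper:,.0f}x")
```

On these numbers, the cost claim holds for this test. The speed estimate sits above the reported 5,000x, as expected when the GPU runtime is rounded down to one hour.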

Inside Voxel51: The Team Reshaping Visual AI Workflows

Founded in 2016 by Professor Jason Corso and Brian Moore at the University of Michigan, Voxel51 originally started as a consultancy focused on video analytics. Corso, a veteran in computer vision and robotics, has published over 150 academic papers and contributes extensive open-source code to the AI community. Moore, a former Ph.D. student of Corso, serves as CEO.

The turning point came when the team recognized that most AI bottlenecks weren't in model design but in the data. That insight inspired them to create FiftyOne, a platform designed to help engineers explore, curate, and optimize visual datasets more efficiently.

Over the years, the company has raised over $45M, including a $12.5M Series A and a $30M Series B led by Bessemer Venture Partners. Enterprise adoption followed, with major clients like LG Electronics, Bosch, Berkshire Grey, Precision Planting, and RIOS integrating Voxel51’s tools into their production AI workflows.

From Tool to Platform: FiftyOne’s Expanding Role

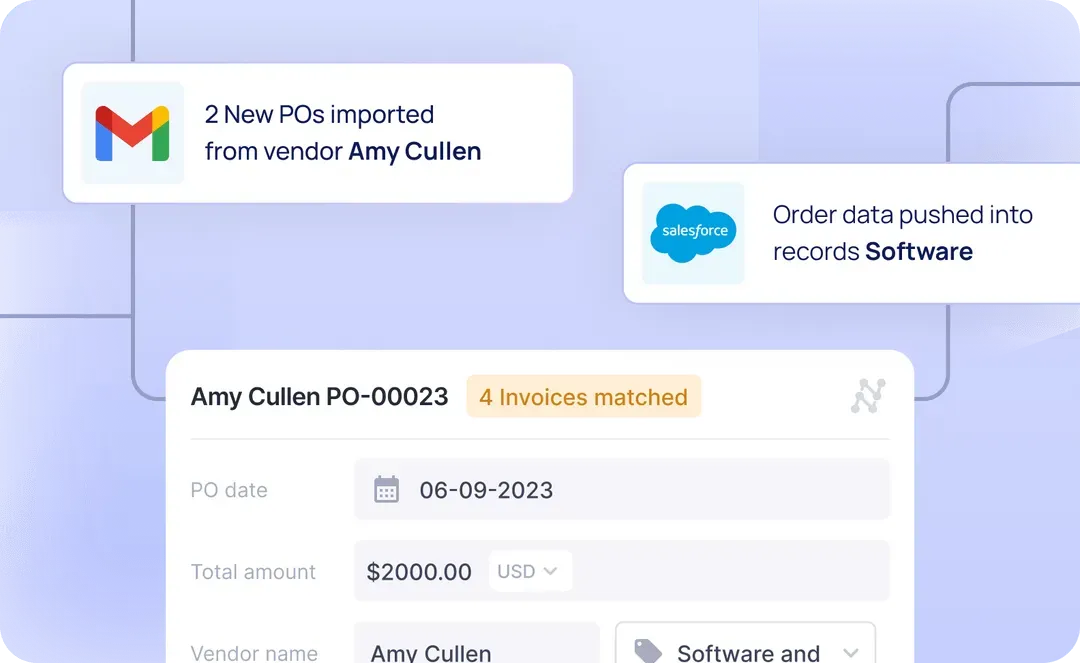

FiftyOne has grown from a simple dataset visualization tool to a comprehensive, data-centric AI platform. It supports a wide array of formats and labeling schemas—COCO, Pascal VOC, LVIS, BDD100K, Open Images—and integrates seamlessly with frameworks like TensorFlow and PyTorch.

More than a visualization tool, FiftyOne enables advanced operations: finding duplicate images, identifying mislabeled samples, surfacing outliers, and measuring model failure modes. Its plugin ecosystem supports custom modules for optical character recognition, video Q&A, and embedding-based analysis.

The enterprise version, FiftyOne Teams, adds collaborative features such as version control and access permissions, along with integrations for cloud storage (e.g., S3) and annotation tools like Labelbox and CVAT. Notably, Voxel51 also partnered with V7 Labs to streamline the flow between dataset curation and manual annotation.

Rethinking the Annotation Industry

Voxel51’s auto-labeling research challenges the assumptions underpinning a nearly $1B annotation industry. In traditional workflows, every image must be touched by a human—an expensive and often redundant process. Voxel51 argues that most of this labor can now be eliminated.

Under this system, AI labels the majority of images and only edge cases are escalated to humans. Voxel51 presents this hybrid strategy as a way to cut costs while also improving overall data quality, since human effort is reserved for the most difficult or valuable annotations.

This shift parallels broader trends in the AI field toward data-centric AI—a methodology that focuses on optimizing the training data rather than endlessly tuning model architectures.

Competitive Landscape and Industry Reception

Investors like Bessemer view Voxel51 as the “data orchestration layer” for AI, akin to how DevOps tools transformed software development. Voxel51's open-source tool, FiftyOne, has garnered millions of downloads, and its community includes thousands of developers and ML teams worldwide.

While other startups like Snorkel AI, Roboflow, and Activeloop also focus on data workflows, Voxel51 stands out for its breadth, open-source ethos, and enterprise-grade infrastructure. Rather than competing with annotation providers, Voxel51’s platform complements them—making existing services more efficient through selective curation.

Future Implications

The long-term implications are profound. If widely adopted, Voxel51’s methodology could dramatically lower the barrier to entry for computer vision, democratizing the field for startups and researchers who lack vast labeling budgets.

Beyond saving costs, this approach also lays the foundation for continuous learning systems, where models in production automatically flag failures, which are then reviewed, relabeled, and folded back into the training data—all within the same orchestrated pipeline.

The company’s broader vision aligns with how AI is evolving: not just smarter models, but smarter workflows. In that vision, annotation isn’t dead—but it’s no longer the domain of brute-force labor. It’s strategic, selective, and driven by automation.

The post Voxel51’s New Auto-Labeling Tech Promises to Slash Annotation Costs by 100,000x appeared first on Unite.AI.
