A Comprehensive Coding Guide to Crafting Advanced Round-Robin Multi-Agent Workflows with Microsoft AutoGen

This post first appeared on MarkTechPost.

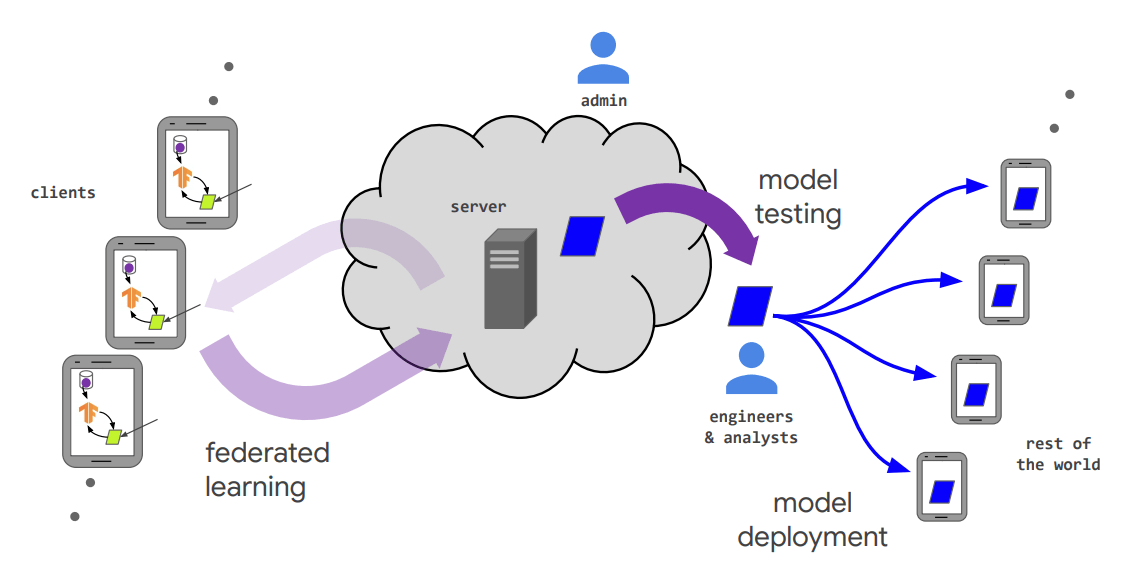

In this tutorial, we demonstrate how Microsoft’s AutoGen framework lets developers orchestrate complex, multi-agent workflows with minimal code. Using AutoGen’s RoundRobinGroupChat and TeamTool abstractions, you can assemble specialist assistants, such as Researchers, FactCheckers, Critics, Summarizers, and Editors, into a cohesive “DeepDive” tool. AutoGen handles turn-taking, termination conditions, and streaming output, so you can focus on defining each agent’s expertise and system prompts rather than wiring up callbacks or manual prompt chains. Whether you are conducting in-depth research, validating facts, refining prose, or integrating third-party tools, AutoGen provides a unified API that scales from simple two-agent pipelines to elaborate five-agent teams.

!pip install -q autogen-agentchat[gemini] autogen-ext[openai] nest_asyncio

We install the AutoGen AgentChat package with Gemini support, the OpenAI extension for API compatibility, and the nest_asyncio library to patch the notebook’s event loop, so that all the components needed to run asynchronous, multi-agent workflows in Colab are in place.

import os
from getpass import getpass

import nest_asyncio

nest_asyncio.apply()
os.environ["GEMINI_API_KEY"] = getpass("Enter your Gemini API key: ")

We import and apply nest_asyncio to enable nested event loops in notebook environments, then securely prompt for your Gemini API key using getpass and store it in os.environ for authenticated model client access.
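To see why the event-loop patch matters, the following standard-library sketch reproduces the failure that nest_asyncio is meant to work around: calling asyncio.run while a loop is already running raises a RuntimeError. The helper names inner and outer are illustrative only, and the script is meant to be run as a plain Python file rather than inside a notebook, where a loop is already active.

```python
import asyncio


async def inner() -> str:
    # Stand-in for any coroutine you might want to run, such as an agent call.
    return "done"


async def outer() -> str:
    # We are now inside a running event loop, much like a notebook cell is.
    coro = inner()
    try:
        asyncio.run(coro)  # attempt to start a second loop inside the first
        return "nested run succeeded"
    except RuntimeError as exc:
        coro.close()  # avoid a "coroutine was never awaited" warning
        return f"RuntimeError: {exc}"


message = asyncio.run(outer())
print(message)
```

Without a patch, the nested call is rejected, which is why the tutorial applies nest_asyncio before awaiting anything in Colab.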

from autogen_ext.models.openai import OpenAIChatCompletionClient

model_client = OpenAIChatCompletionClient(
    model="gemini-1.5-flash-8b",
    api_key=os.environ["GEMINI_API_KEY"],
    api_type="google",
)

We initialize an OpenAI-compatible chat client pointed at Google’s Gemini by specifying the gemini-1.5-flash-8b model, injecting your stored Gemini API key, and setting api_type="google", giving you a ready-to-use model_client for downstream AutoGen agents.

from autogen_agentchat.agents import AssistantAgent

researcher = AssistantAgent(name="Researcher", system_message="Gather and summarize factual info.", model_client=model_client)
factchecker = AssistantAgent(name="FactChecker", system_message="Verify facts and cite sources.", model_client=model_client)
critic = AssistantAgent(name="Critic", system_message="Critique clarity and logic.", model_client=model_client)
summarizer = AssistantAgent(name="Summarizer", system_message="Condense into a brief executive summary.", model_client=model_client)
editor = AssistantAgent(name="Editor", system_message="Polish language and signal APPROVED when done.", model_client=model_client)

We define five specialized assistant agents, Researcher, FactChecker, Critic, Summarizer, and Editor, each initialized with a role-specific system message and the shared Gemini-powered model client. Respectively, they gather information, verify accuracy, critique content, condense summaries, and polish language within the AutoGen workflow.

from autogen_agentchat.teams import RoundRobinGroupChat
from autogen_agentchat.conditions import MaxMessageTermination, TextMentionTermination

max_msgs = MaxMessageTermination(max_messages=20)
text_term = TextMentionTermination(text="APPROVED", sources=["Editor"])
termination = max_msgs | text_term

team = RoundRobinGroupChat(
    participants=[researcher, factchecker, critic, summarizer, editor],
    termination_condition=termination,
)

We import the RoundRobinGroupChat class along with two termination conditions, then compose a stop rule that fires after 20 total messages or when the Editor agent mentions “APPROVED.” Finally, we instantiate a round-robin team of the five specialized agents with that combined termination logic, so they cycle through research, fact-checking, critique, summarization, and editing until one of the stop conditions is met.
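The turn-taking and stop logic described here can be illustrated without any model calls. The sketch below is a plain-Python approximation, not AutoGen's implementation: Message, max_messages, text_mention, either, and run_round_robin are hypothetical names, the agents are scripted stand-ins, and counting the initial task toward the message limit is a choice made for this example.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Callable, Dict, List


@dataclass
class Message:
    source: str
    content: str


# A stop rule looks at the transcript so far and returns True to end the chat.
StopRule = Callable[[List[Message]], bool]
# A speaker reads the transcript and returns its next reply.
Speaker = Callable[[List[Message]], str]


def max_messages(limit: int) -> StopRule:
    """Stop once the transcript holds `limit` messages (the task counts here)."""
    return lambda transcript: len(transcript) >= limit


def text_mention(text: str, sources: List[str]) -> StopRule:
    """Stop when the latest message comes from `sources` and contains `text`."""
    def rule(transcript: List[Message]) -> bool:
        last = transcript[-1]
        return last.source in sources and text in last.content
    return rule


def either(*rules: StopRule) -> StopRule:
    """Combine rules with OR: the chat ends as soon as any rule fires."""
    return lambda transcript: any(rule(transcript) for rule in rules)


def run_round_robin(task: str, speakers: Dict[str, Speaker], stop: StopRule) -> List[Message]:
    """Let speakers reply in a fixed rotation until the stop rule fires."""
    transcript = [Message("user", task)]
    for name in cycle(speakers):  # dicts preserve insertion order
        transcript.append(Message(name, speakers[name](transcript)))
        if stop(transcript):
            break
    return transcript


def scripted(reply: str) -> Speaker:
    """Stand-in for a model-backed agent: always returns the same text."""
    return lambda transcript: reply


def editor(transcript: List[Message]) -> str:
    """Approve only on the second editing pass, so the rotation wraps once."""
    already_edited = any(m.source == "Editor" for m in transcript)
    return "APPROVED" if already_edited else "Needs another pass."


speakers = {
    "Researcher": scripted("Here are the collected notes."),
    "FactChecker": scripted("Claims checked against sources."),
    "Critic": scripted("Not ready to be APPROVED yet."),  # wrong source, ignored
    "Summarizer": scripted("Short executive summary."),
    "Editor": editor,
}
stop = either(max_messages(20), text_mention("APPROVED", ["Editor"]))
transcript = run_round_robin("Deep dive on: a sample topic", speakers, stop)
for message in transcript:
    print(f"{message.source}: {message.content}")
```

In this run the Critic's use of the word “APPROVED” does not end the chat, because the text rule is restricted to the Editor; the conversation stops on the Editor's second turn, well before the 20-message cap.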

from autogen_agentchat.tools import TeamTool

deepdive_tool = TeamTool(team=team, name="DeepDive", description="Collaborative multi-agent deep dive")

We wrap our RoundRobinGroupChat team in a TeamTool named “DeepDive” with a human-readable description, packaging the entire multi-agent workflow into a single callable tool that other agents can invoke.

host = AssistantAgent(
    name="Host",
    model_client=model_client,
    tools=[deepdive_tool],
    system_message="You have access to a DeepDive tool for in-depth research.",
)

We create a “Host” assistant agent configured with the shared Gemini-powered model_client, grant it the DeepDive team tool for orchestrating in-depth research, and prime it with a system message that informs it of its ability to invoke the multi-agent DeepDive workflow.
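The idea of packaging a whole workflow behind one tool name, then handing that tool to a host, can be sketched in plain Python. This is only an illustration of the pattern under simplifying assumptions: WorkflowTool, SketchHost, and deep_dive are hypothetical names, the five stages are string transformations rather than agents, and the caller picks the tool explicitly, whereas a model-backed host would decide when to call it.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class WorkflowTool:
    """Hypothetical stand-in for a team wrapped as a single tool."""
    name: str
    description: str
    run: Callable[[str], str]


def deep_dive(task: str) -> str:
    """Stand-in for the five-agent team: each stage wraps the previous output."""
    text = task
    for stage in ("research", "fact-check", "critique", "summarize", "edit"):
        text = f"{stage}[{text}]"
    return text


class SketchHost:
    """Keeps a registry of tools and dispatches a task to one of them by name."""

    def __init__(self, tools: List[WorkflowTool]) -> None:
        self.tools: Dict[str, WorkflowTool] = {tool.name: tool for tool in tools}

    def call(self, tool_name: str, task: str) -> str:
        if tool_name not in self.tools:
            raise KeyError(f"Unknown tool: {tool_name}")
        return self.tools[tool_name].run(task)


host_sketch = SketchHost(
    [WorkflowTool("DeepDive", "Collaborative multi-agent deep dive", deep_dive)]
)
report = host_sketch.call("DeepDive", "quantum computing")
print(report)
```

The host only needs the tool's name and description to use it; the number of stages behind the tool can change without touching the host.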

import asyncio

async def run_deepdive(topic: str):
    result = await host.run(task=f"Deep dive on: {topic}")
    print(" […]
