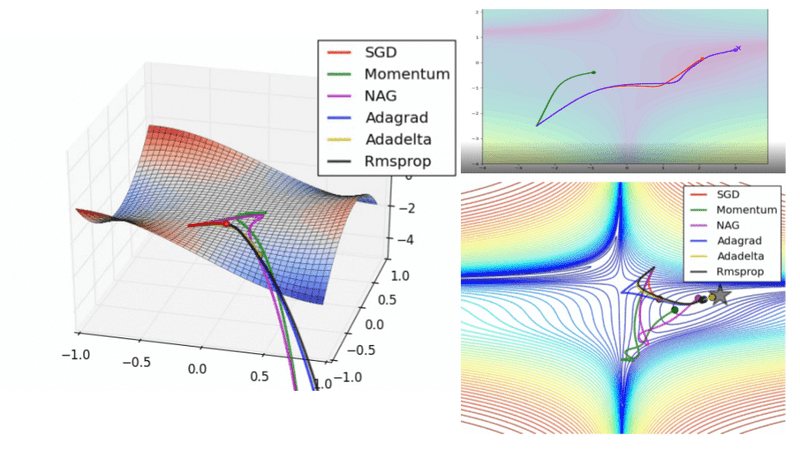

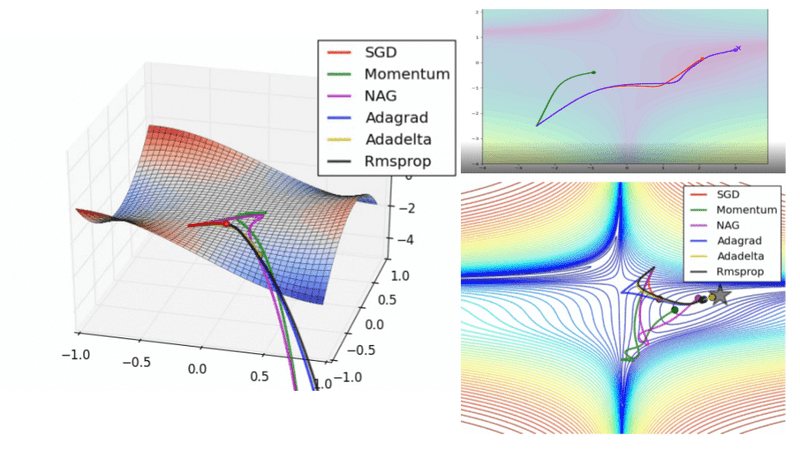

A journey into Optimization algorithms for Deep Neural Networks

An overview of the most popular optimization algorithms for training deep neural networks, from stochastic gradient descent to Adam, AdaBelief, and second-order optimization.

