Zero-Shot Player Tracking in Tennis with Kalman Filtering

Automated tennis tracking without labels: GroundingDINO, Kalman filtering, and court homographyhttps://medium.com/media/6f735abc63f905de122bb8a0679f97fd/hrefWith the recent surge in sports tracking projects, many inspired by Skalski’s popular soccer tracking project, there’s been a notable shift towards using automated player tracking for sport hobbyists. Most of these approaches follow a familiar workflow: collect labeled data, train a YOLO model, project player coordinates onto an overhead view of the field or court, and use this tracking data to generate advanced analytics for potential competitive insights. However, in this project, we provide the tools to bypass the need for labeled data, relying instead on GroundingDINO’s zero-shot tracking capabilities in combination with a Kalman filter implementation to overcome noisy outputs from GroundingDino.Our dataset originates from a set of broadcast videos, publicly available under an MIT License thanks to Hayden Faulkner and team.¹ This data includes footage from various tennis matches during the 2012 Olympics at Wimbledon, we focus on a match between Serena Williams and Victoria Azarenka.https://medium.com/media/52659e7e9e29b83dcfe248555616f546/hrefGroundingDINO, for those not familiar, merges object detection with language allowing users to supply a prompt like “a tennis player” which then leads the model to return candidate object detection boxes that fit the description. RoboFlow has a great tutorial here for those interested in using it — but I have pasted some very basic code below as well. As seen below you can prompt the model to identify objects that would very rarely if ever be tagged in an object detection dataset like a dog’s tongue!from groundingdino.util.inference import load_model, load_image, predict, annotateBOX_TRESHOLD = 0.35TEXT_TRESHOLD = 0.25# processes the image to GroundingDino standardsimage_source, image = load_image("dog.jpg")prompt = "dog tongue, dog"boxes, logits, phrases = predict( model=model, image=image, caption=TEXT_PROMPT, box_threshold=BOX_TRESHOLD, text_threshold=TEXT_TRESHOLD)GroundingDino output when prompted with “Dog” and “Dog tongue.” Picture is owned by the author.However, distinguishing players on a professional tennis court isn’t as simple as prompting for “tennis players.” The model often misidentifies other individuals on the court, such as line judges, ball people, and other umpires, causing jumpy and inconsistent annotations. Additionally, the model sometimes fails to even detect the players in certain frames, leading to gaps and non-persistent boxes that don’t reliably appear in each frame.Tracking picks up a lines person in the first example and a ball person in the second. Image made by the author.To address these challenges, we apply a few targeted methods. First, we narrow down the detection boxes to just the top three probabilities from all possible boxes. Often, line judges have a higher probability score than players, which is why we don’t filter to only two boxes. However, this raises a new question: how can we automatically distinguish players from line judges in each frame?We observed that detection boxes for line and ball personnel typically have shorter time spans, often lasting just a few frames. Based on this, we hypothesize that by associating boxes across consecutive frames, we could filter out people that only appear briefly, thereby isolating the players.So how do we achieve this kind of association between objects across frames? Fortunately, the field of multi-object tracking has extensively studied this problem. Kalman filters are a mainstay in multi-object tracking, often combined with other identification metrics, such as color. For our purposes, a basic Kalman filter implementation is sufficient. In simple terms (for a deeper dive, check this article out), a Kalman filter is a method for probabilistically estimating an object’s position based on previous measurements. It’s particularly effective with noisy data but also works well associating objects across time in videos, even when detections are inconsistent such as when a player is not tracked every frame. We implement an entire Kalman filter here but will walk through some of the main steps in the following paragraphs.A Kalman filter state for 2 dimensions is quite simple as shown below. All we have to do is keep track of the x and y location as well as the objects velocity in both directions (we ignore acceleration).class KalmanStateVector2D: x: float y: float vx: float vy: floatThe Kalman filter operates in two steps: it first predicts an object’s location in the next frame, then updates this prediction based on a new measurement — in our case, from the object detector. However, in our example a new frame could have multiple new objects, or it could even drop objects that were present in the previous frame leading to the question of how we can associate boxes we have seen previously with those seen

Automated tennis tracking without labels: GroundingDINO, Kalman filtering, and court homography

https://medium.com/media/6f735abc63f905de122bb8a0679f97fd/hrefWith the recent surge in sports tracking projects, many inspired by Skalski’s popular soccer tracking project, there’s been a notable shift towards using automated player tracking for sport hobbyists. Most of these approaches follow a familiar workflow: collect labeled data, train a YOLO model, project player coordinates onto an overhead view of the field or court, and use this tracking data to generate advanced analytics for potential competitive insights. However, in this project, we provide the tools to bypass the need for labeled data, relying instead on GroundingDINO’s zero-shot tracking capabilities in combination with a Kalman filter implementation to overcome noisy outputs from GroundingDino.

Our dataset originates from a set of broadcast videos, publicly available under an MIT License thanks to Hayden Faulkner and team.¹ This data includes footage from various tennis matches during the 2012 Olympics at Wimbledon, we focus on a match between Serena Williams and Victoria Azarenka.https://medium.com/media/52659e7e9e29b83dcfe248555616f546/href

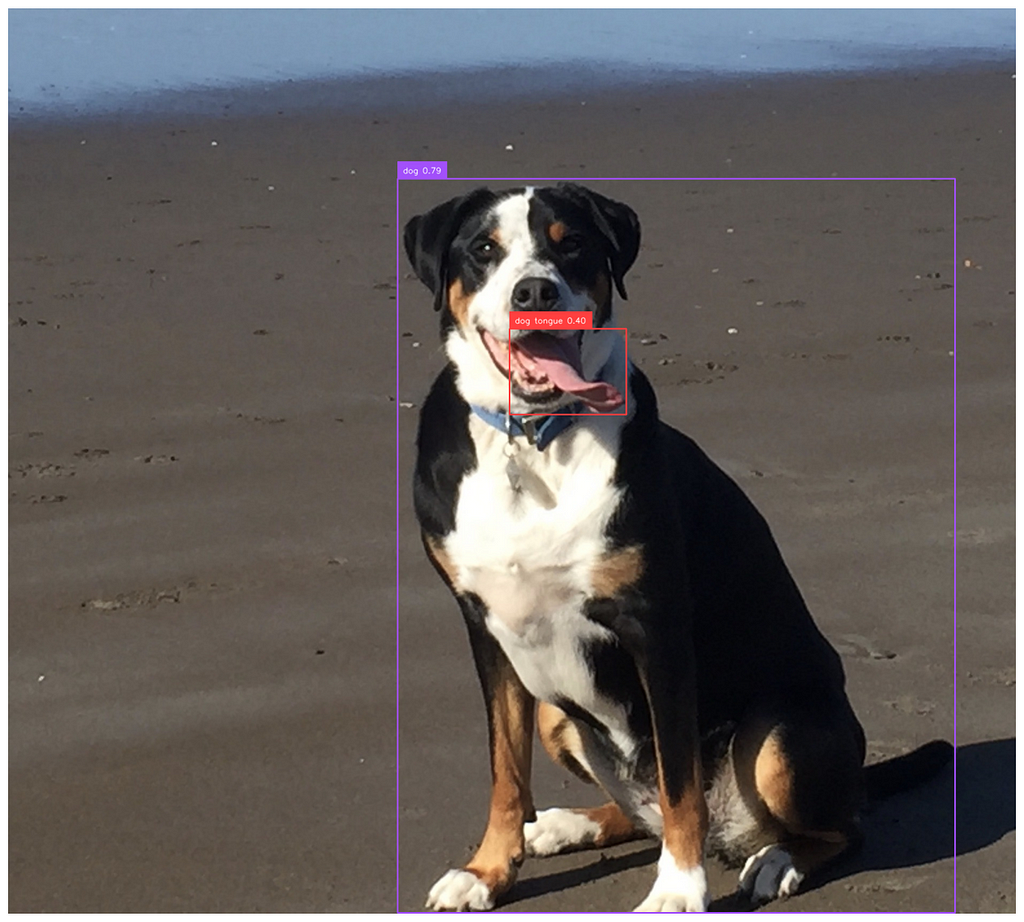

GroundingDINO, for those not familiar, merges object detection with language allowing users to supply a prompt like “a tennis player” which then leads the model to return candidate object detection boxes that fit the description. RoboFlow has a great tutorial here for those interested in using it — but I have pasted some very basic code below as well. As seen below you can prompt the model to identify objects that would very rarely if ever be tagged in an object detection dataset like a dog’s tongue!

from groundingdino.util.inference import load_model, load_image, predict, annotate

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

# processes the image to GroundingDino standards

image_source, image = load_image("dog.jpg")

prompt = "dog tongue, dog"

boxes, logits, phrases = predict(

model=model,

image=image,

caption=TEXT_PROMPT,

box_threshold=BOX_TRESHOLD,

text_threshold=TEXT_TRESHOLD

)

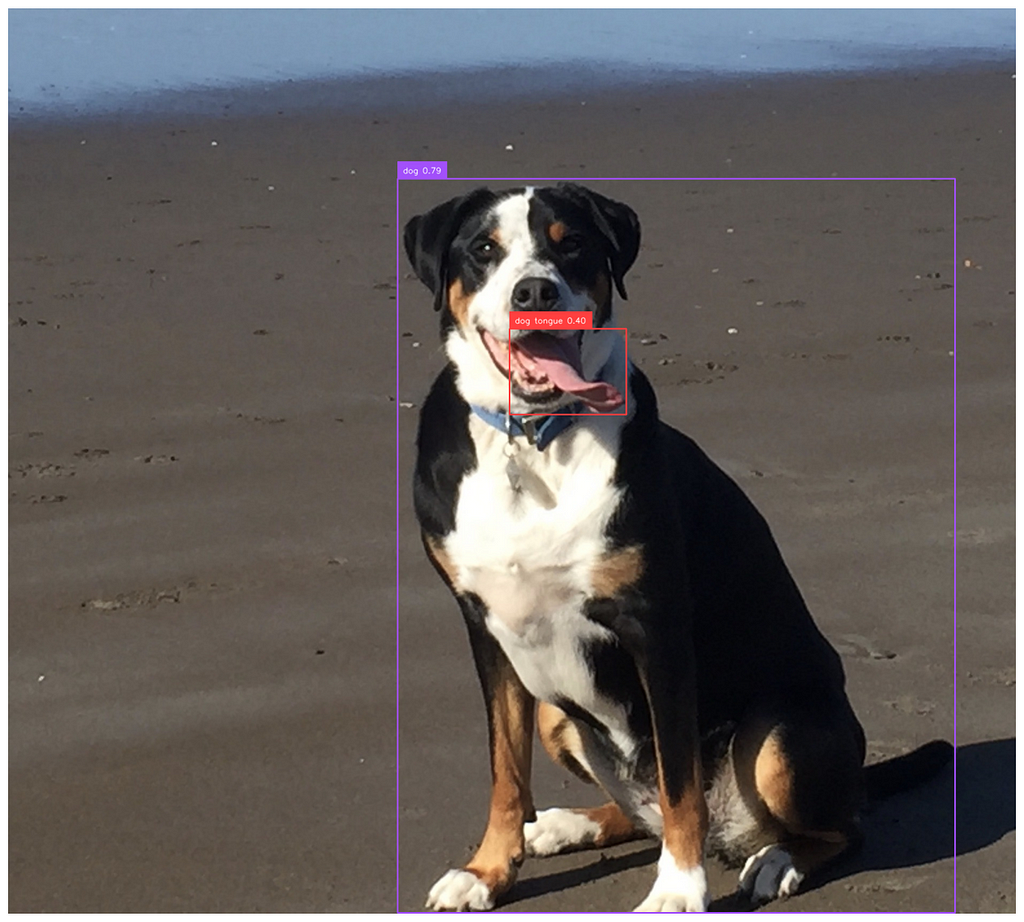

However, distinguishing players on a professional tennis court isn’t as simple as prompting for “tennis players.” The model often misidentifies other individuals on the court, such as line judges, ball people, and other umpires, causing jumpy and inconsistent annotations. Additionally, the model sometimes fails to even detect the players in certain frames, leading to gaps and non-persistent boxes that don’t reliably appear in each frame.

To address these challenges, we apply a few targeted methods. First, we narrow down the detection boxes to just the top three probabilities from all possible boxes. Often, line judges have a higher probability score than players, which is why we don’t filter to only two boxes. However, this raises a new question: how can we automatically distinguish players from line judges in each frame?

We observed that detection boxes for line and ball personnel typically have shorter time spans, often lasting just a few frames. Based on this, we hypothesize that by associating boxes across consecutive frames, we could filter out people that only appear briefly, thereby isolating the players.

So how do we achieve this kind of association between objects across frames? Fortunately, the field of multi-object tracking has extensively studied this problem. Kalman filters are a mainstay in multi-object tracking, often combined with other identification metrics, such as color. For our purposes, a basic Kalman filter implementation is sufficient. In simple terms (for a deeper dive, check this article out), a Kalman filter is a method for probabilistically estimating an object’s position based on previous measurements. It’s particularly effective with noisy data but also works well associating objects across time in videos, even when detections are inconsistent such as when a player is not tracked every frame. We implement an entire Kalman filter here but will walk through some of the main steps in the following paragraphs.

A Kalman filter state for 2 dimensions is quite simple as shown below. All we have to do is keep track of the x and y location as well as the objects velocity in both directions (we ignore acceleration).

class KalmanStateVector2D:

x: float

y: float

vx: float

vy: float

The Kalman filter operates in two steps: it first predicts an object’s location in the next frame, then updates this prediction based on a new measurement — in our case, from the object detector. However, in our example a new frame could have multiple new objects, or it could even drop objects that were present in the previous frame leading to the question of how we can associate boxes we have seen previously with those seen currently.

We choose to do this by using the Mahalanobis distance, coupled with a chi-squared test, to assess the probability that a current detection matches a past object. Additionally, we keep a queue of past objects so we have a longer ‘memory’ than just one frame. Specifically, our memory stores the trajectory of any object seen over the last 30 frames. Then for each object we find in a new frame we iterate over our memory and find the previous object most likely to be a match with the current given by the probability given from the Mahalanbois distance. However, it’s possible we are seeing an entirely new object as well, in which case we should add a new object to our memory. If any object has <30% probability of being associated with any box in our memory we add it to our memory as a new object.

We provide our full Kalman filter below for those preferring code.

from dataclasses import dataclass

import numpy as np

from scipy import stats

class KalmanStateVectorNDAdaptiveQ:

states: np.ndarray # for 2 dimensions these are [x, y, vx, vy]

cov: np.ndarray # 4x4 covariance matrix

def __init__(self, states: np.ndarray) -> None:

self.state_matrix = states

self.q = np.eye(self.state_matrix.shape[0])

self.cov = None

# assumes a single step transition

self.f = np.eye(self.state_matrix.shape[0])

# divide by 2 as we have a velocity for each state

index = self.state_matrix.shape[0] // 2

self.f[:index, index:] = np.eye(index)

def initialize_covariance(self, noise_std: float) -> None:

self.cov = np.eye(self.state_matrix.shape[0]) * noise_std**2

def predict_next_state(self, dt: float) -> None:

self.state_matrix = self.f @ self.state_matrix

self.predict_next_covariance(dt)

def predict_next_covariance(self, dt: float) -> None:

self.cov = self.f @ self.cov @ self.f.T + self.q

def __add__(self, other: np.ndarray) -> np.ndarray:

return self.state_matrix + other

def update_q(

self, innovation: np.ndarray, kalman_gain: np.ndarray, alpha: float = 0.98

) -> None:

innovation = innovation.reshape(-1, 1)

self.q = (

alpha * self.q

+ (1 - alpha) * kalman_gain @ innovation @ innovation.T @ kalman_gain.T

)

class KalmanNDTrackerAdaptiveQ:

def __init__(

self,

state: KalmanStateVectorNDAdaptiveQ,

R: float, # R

Q: float, # Q

h: np.ndarray = None,

) -> None:

self.state = state

self.state.initialize_covariance(Q)

self.predicted_state = None

self.previous_states = []

self.h = np.eye(self.state.state_matrix.shape[0]) if h is None else h

self.R = np.eye(self.h.shape[0]) * R**2

self.previous_measurements = []

self.previous_measurements.append(

(self.h @ self.state.state_matrix).reshape(-1, 1)

)

def predict(self, dt: float) -> None:

self.previous_states.append(self.state)

self.state.predict_next_state(dt)

def update_covariance(self, gain: np.ndarray) -> None:

self.state.cov -= gain @ self.h @ self.state.cov

def update(

self, measurement: np.ndarray, dt: float = 1, predict: bool = True

) -> None:

"""Measurement will be a x, y position"""

self.previous_measurements.append(measurement)

assert dt == 1, "Only single step transitions are supported due to F matrix"

if predict:

self.predict(dt=dt)

innovation = measurement - self.h @ self.state.state_matrix

gain_invertible = self.h @ self.state.cov @ self.h.T + self.R

gain_inverse = np.linalg.inv(gain_invertible)

gain = self.state.cov @ self.h.T @ gain_inverse

new_state = self.state.state_matrix + gain @ innovation

self.update_covariance(gain)

self.state.update_q(innovation, gain)

self.state.state_matrix = new_state

def compute_mahalanobis_distance(self, measurement: np.ndarray) -> float:

innovation = measurement - self.h @ self.state.state_matrix

return np.sqrt(

innovation.T

@ np.linalg.inv(

self.h @ self.state.cov @ self.h.T + self.R

)

@ innovation

)

def compute_p_value(self, distance: float) -> float:

return 1 - stats.chi2.cdf(distance, df=self.h.shape[0])

def compute_p_value_from_measurement(self, measurement: np.ndarray) -> float:

"""Returns the probability that the measurement is consistent with the predicted state"""

distance = self.compute_mahalanobis_distance(measurement)

return self.compute_p_value(distance)

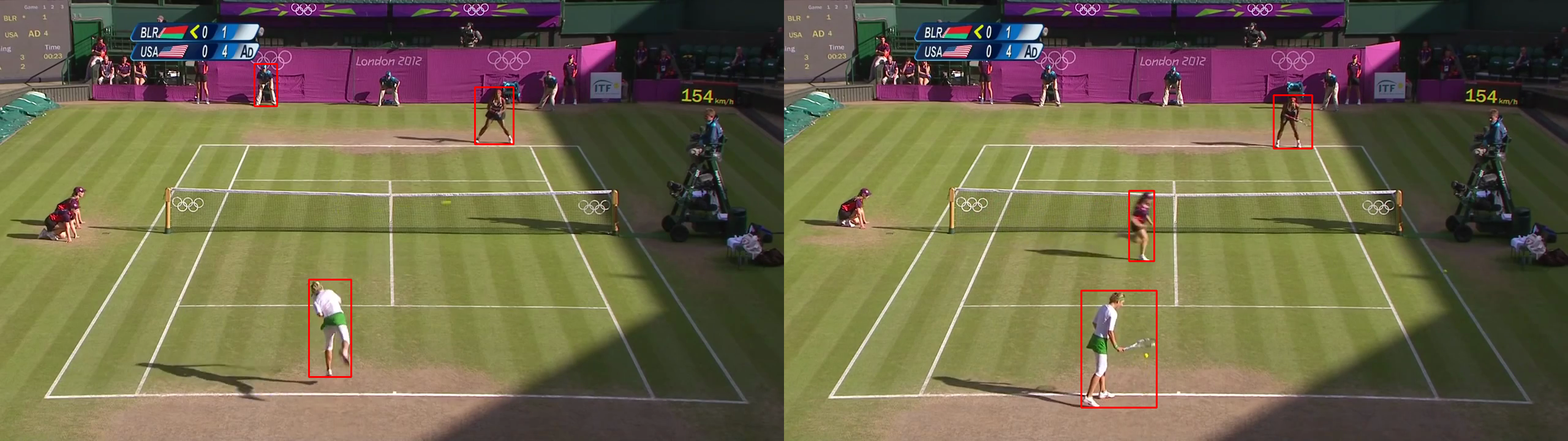

Having tracked every detected object over the past 30 frames, we can now devise heuristics to pinpoint which boxes most likely represent our players. We tested two approaches: selecting the boxes nearest the center of the baseline, and picking those with the longest observed history in our memory. Empirically, the first strategy often flagged line judges as players whenever the actual player moved away from the baseline, making it less reliable. Meanwhile, we noticed that GroundingDino tends to “flicker” between different line judges and ball people, while genuine players maintain a somewhat stable presence. As a result, our final rule is to pick the boxes in our memory with the longest tracking history as the true players. As you can see in the initial video, it’s surprisingly effective for such a simple rule!

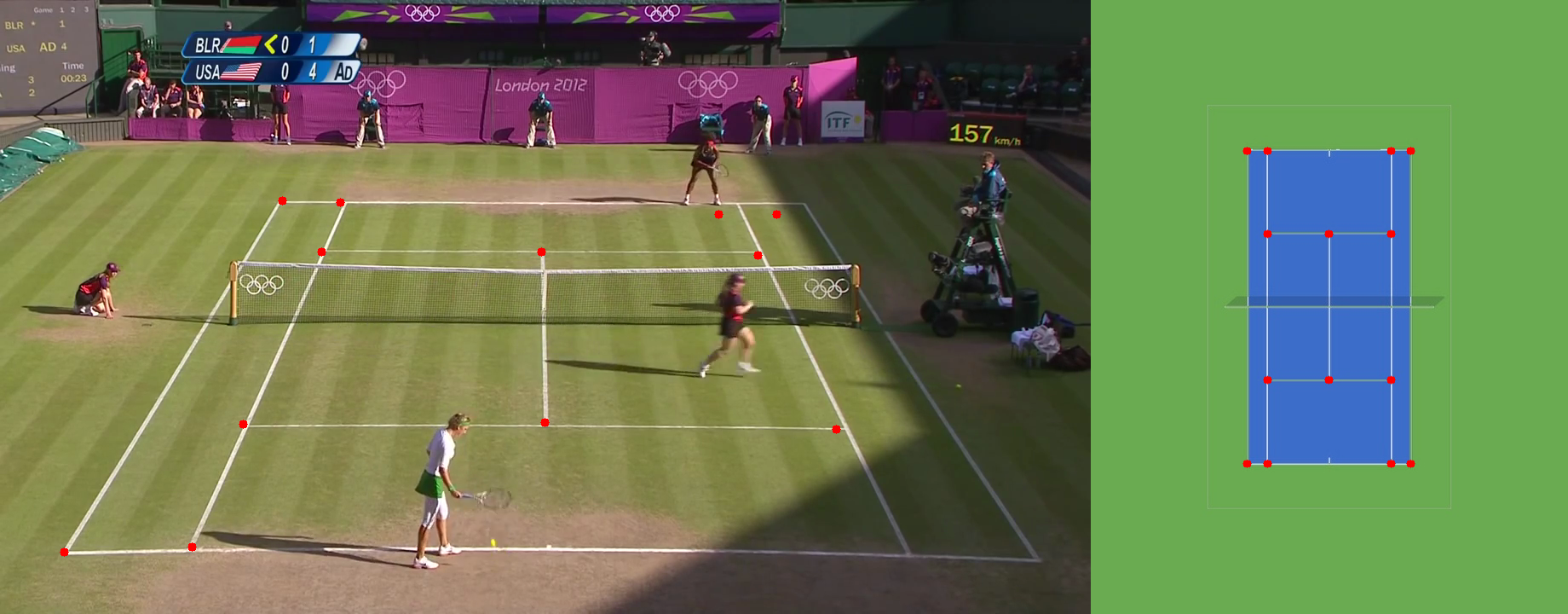

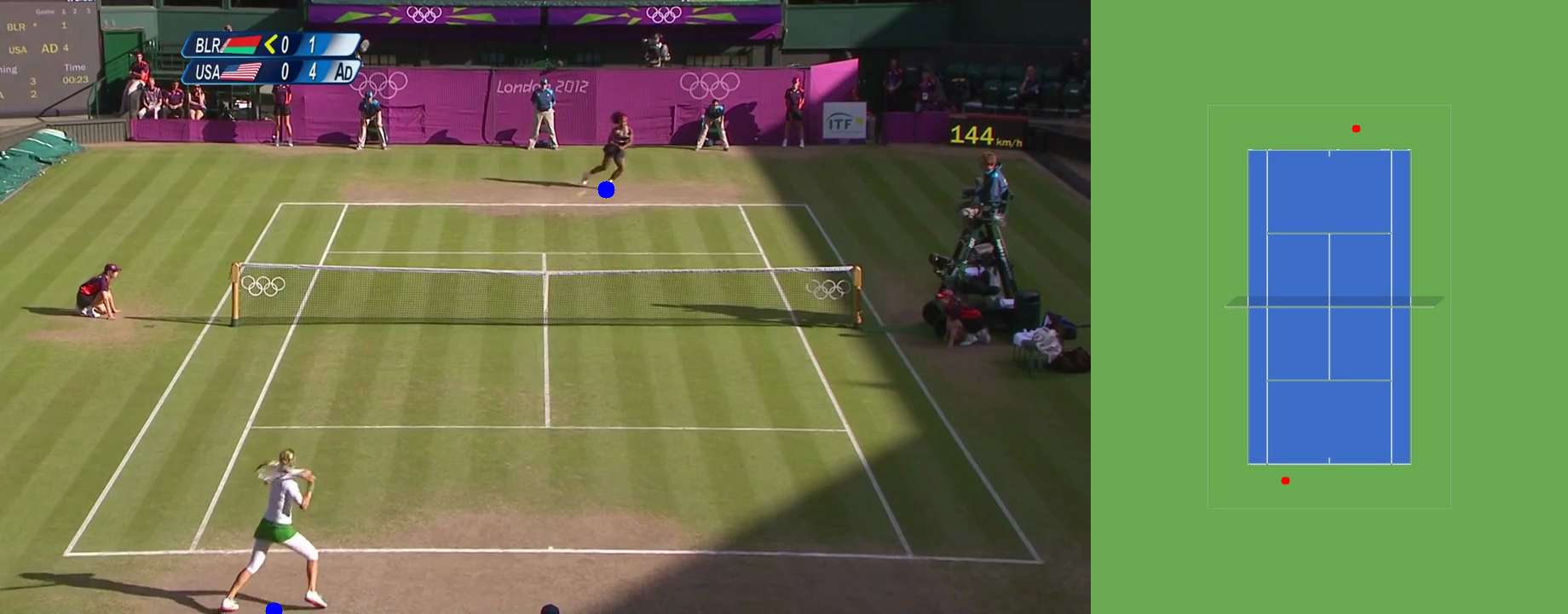

With our tracking system now established on the image, we can move toward a more traditional analysis by tracking players from a bird’s-eye perspective. This viewpoint enables the evaluation of key metrics, such as total distance traveled, player speed, and court positioning trends. For example, we could analyze whether a player frequently targets their opponent’s backhand based on location during a point. To accomplish this, we need to project the player coordinates from the image onto a standardized court template viewed from above, aligning the perspective for spatial analysis.

This is where homography comes into play. Homography describes the mapping between two surfaces, which, in our case, means mapping the points on our original image to an overhead court view. By identifying a few keypoints in the original image — such as line intersections on a court — we can calculate a homography matrix that translates any point to a bird’s-eye view. To create this homography matrix, we first need to identify these ‘keypoints.’ Various open-source, permissively licensed models on platforms like RoboFlow can help detect these points, or we can label them ourselves on a reference image to use in the transformation.

After labeling these keypoints, the next step is to match them with corresponding points on a reference court image to generate a homography matrix. Using OpenCV, we can then create this transformation matrix with a few simple lines of code!

import numpy as np

import cv2

# order of the points matters

source = np.array(keypoints) # (n, 2) matrix

target = np.array(court_coords) # (n, 2) matrix

m, _ = cv2.findHomography(source, target)

With the homography matrix in hand, we can map any point from our image onto the reference court. For this project, our focus is on the player’s position on the court. To determine this, we take the midpoint at the base of each player’s bounding box, using it as their location on the court in the bird’s-eye view.

In summary, this project demonstrates how we can use GroundingDINO’s zero-shot capabilities to track tennis players without relying on labeled data, transforming complex object detection into actionable player tracking. By tackling key challenges — such as distinguishing players from other on-court personnel, ensuring consistent tracking across frames, and mapping player movements to a bird’s-eye view of the court — we’ve laid the groundwork for a robust tracking pipeline all without the need for explicit labels.

This approach doesn’t just unlock insights like distance traveled, speed, and positioning but also opens the door to deeper match analytics, such as shot targeting and strategic court coverage. With further refinement, including distilling a YOLO or RT-DETR model from GroundingDINO outputs, we could even develop a real-time tracking system that rivals existing commercial solutions, providing a powerful tool for both coaching and fan engagement in the world of tennis.

- @inproceedings{faulkner2017tenniset,

title={TenniSet: A Dataset for Dense Fine-Grained Event Recognition, Localisation and Description},

author={Faulkner, Hayden and Dick, Anthony},

booktitle={2017 International Conference on Digital Image Computing: Techniques and Applications (DICTA)},

pages={1–8},

organization={IEEE}

}

Zero-Shot Player Tracking in Tennis with Kalman Filtering was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

/cdn.vox-cdn.com/uploads/chorus_asset/file/25336775/STK051_TIKTOKBAN_CVirginia_D.jpg)