Optimize the dbt Doc Function with a CI

How to set up an automated check to improve your dbt documentation

In large dbt projects, keeping documentation consistent and up to date can be a challenge. Although dbt’s {{ doc() }} function lets you store and reuse descriptions for the columns of your models, making sure it is actually used remains a manual, error-prone process that can easily lead to incomplete or outdated documentation. In this article, we’ll explore how to automate the validation of your dbt documentation using a custom Continuous Integration (CI) workflow in GitHub Actions.

I already wrote, in a previous article, about the doc feature in dbt and how it helps create consistent and accurate documentation across the entire dbt project. In short, you can store the descriptions of the most common or important columns used in your data models by adding them to Markdown (.md) files, which live in the docs folder of your dbt project.

A very simple example of an orders.md file that contains the descriptions for the most common order-related column names:

    # Fields description

    ## order_id
    {% docs orders__order_id %}
    Unique alphanumeric identifier of the order, used to join all order dimension tables
    {% enddocs %}

    ## order_country
    {% docs orders__order_country %}
    Country where the order was placed. Format is country ISO 3166 code.
    {% enddocs %}

    ## order_value
    {% docs orders__order_value %}
    Total value of the order in local currency.
    {% enddocs %}

    ## order_date
    {% docs orders__order_date %}
    Date of the order in local timezone
    {% enddocs %}

And its usage in the .yml file of a model:

    columns:
      - name: order_id
        description: '{{ doc("orders__order_id") }}'

When the dbt docs are generated, the description of order_id will always be the same, as long as the doc function is used in the .yml file of the model. The benefit of having this centralized documentation is clear: each description is written once and reused everywhere.

The challenge

However, especially in large projects with frequent changes (new models, or changes to existing ones), contributors are likely to either forget to use the doc function or be unaware that a specific column has already been added to the docs folder. This has two consequences:

- someone must catch this during PR review and request a change — assuming there’s at least one reviewer who either knows all the documented columns by heart or always checks the docs folder manually

- because such omissions easily go unnoticed and catching them depends on individuals, this setup defeats the purpose of having centralized documentation.

The solution

The simple answer to this problem is a CI (continuous integration) check that combines a GitHub workflow with a Python script. This check fails if:

- the changes in the PR affect a .yml file that contains a column name present in the docs, but the doc function is not used for that column

- the changes in the PR affect a .yml file that contains a column name present in the docs, but that column has no description at all
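Stated as code, the rule applied to each column can be sketched roughly as follows. This is only an illustration, not the full script: check_column and its return strings are hypothetical names, and the suffix match assumes doc blocks are named with a __column_name ending, as in orders__order_id. Like the full script shown later, it also flags a doc reference that points to a block that does not exist.

```python
import re

# Matches {{ doc("some_name") }} or {{ doc('some_name') }} in a description.
DOC_CALL = re.compile(r'\{\{\s*doc\(["\']([^"\']+)["\']\)\s*\}\}')


def check_column(column_name, description, doc_names):
    """Return None if the column passes, otherwise a short reason string."""
    referenced = DOC_CALL.findall(description or "")
    if referenced:
        # doc() is used: every referenced block must exist.
        missing = [name for name in referenced if name not in doc_names]
        return f"undefined doc: {missing[0]}" if missing else None
    # doc() is not used, or there is no description at all: fail only if a
    # doc block exists for this column name.
    candidates = sorted(n for n in doc_names if n.endswith(f"__{column_name}"))
    return f"should use doc: {candidates[0]}" if candidates else None


docs = {"orders__order_id", "orders__order_country"}
print(check_column("order_country", "The country.", docs))
print(check_column("order_address", "The address.", docs))
```

A missing description and a hand-written description are treated the same way here, which is why the two failure conditions above collapse into a single branch.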

Let’s have a closer look at the code and files needed to run this check, and at a couple of examples. As previously mentioned, there are two things to consider: (1) a .yml file for the workflow and (2) a Python file for the actual validation check.

(1) This is what the validation_docs workflow file looks like. It is placed in the .github/workflows folder.

    name: Validate Documentation

    on:
      pull_request:
        types: [opened, synchronize, reopened]

    jobs:
      validate_docs:
        runs-on: ubuntu-latest
        steps:
          - name: Check out repository code
            uses: actions/checkout@v3
            with:
              fetch-depth: 0

          - name: Install PyYAML
            run: pip install pyyaml

          - name: Run validation script
            run: python validate_docs.py

The workflow runs whenever a pull request is opened or reopened, and every time a new commit is pushed to the pull request’s branch. It then has three steps: checking out the repository’s files for the current pull request, installing the dependencies, and running the validation script.
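The checkout step sets fetch-depth: 0 so that the full history, including the base branch, is available when the validation script runs git diff. As a hedged sketch of how such a script could pick up the base branch of the pull request rather than assuming main: GitHub Actions sets the GITHUB_BASE_REF environment variable on pull_request events, while the helper names build_diff_command and get_changed_files below, and the fallback to main for local runs, are assumptions made for illustration.

```python
import os
import subprocess


def build_diff_command(env=None):
    """Return the git diff command listing files changed against the PR base."""
    env = os.environ if env is None else env
    # GITHUB_BASE_REF is set by GitHub Actions on pull_request events;
    # falling back to "main" is an assumption for local runs.
    base_branch = env.get("GITHUB_BASE_REF") or "main"
    return ["git", "diff", "--name-only", f"origin/{base_branch}..."]


def get_changed_files(env=None):
    """Run the diff and return the changed paths, skipping empty lines."""
    result = subprocess.run(
        build_diff_command(env), capture_output=True, text=True, check=True
    )
    return [line for line in result.stdout.splitlines() if line.strip()]


print(build_diff_command({"GITHUB_BASE_REF": "develop"}))
```

Passing check=True makes a failing git command stop the job instead of silently producing an empty list of changed files.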

(2) Then comes the validate_docs.py script, placed in the root folder of your dbt project repository, which looks like this:

    import os
    import re
    import subprocess
    import sys
    from glob import glob

    import yaml


    def get_changed_files():
        # List the files changed on this branch compared to origin/main.
        diff_command = ['git', 'diff', '--name-only', 'origin/main...']
        result = subprocess.run(diff_command, capture_output=True, text=True)
        changed_files = result.stdout.strip().split('\n')
        return changed_files


    def extract_doc_names():
        # Collect the name of every {% docs <name> %} block under docs/.
        doc_names = set()
        md_files = glob('docs/**/*.md', recursive=True)
        doc_pattern = re.compile(r'\{%\s*docs\s+([^\s%]+)\s*%}')
        for md_file in md_files:
            with open(md_file, 'r') as f:
                content = f.read()
            matches = doc_pattern.findall(content)
            doc_names.update(matches)
        print(f"Extracted doc names: {doc_names}")
        return doc_names


    def parse_yaml_file(yaml_path):
        # Return all YAML documents in the file, or an empty list on a parse error.
        with open(yaml_path, 'r') as f:
            try:
                return list(yaml.safe_load_all(f))
            except yaml.YAMLError as exc:
                print(f"Error parsing YAML file {yaml_path}: {exc}")
                return []


    def validate_columns(columns, doc_names, errors, model_name):
        for column in columns:
            column_name = column.get('name')
            # Treat a missing or null description as an empty string.
            description = column.get('description') or ''
            print(f"Validating column '{column_name}' in model '{model_name}'")
            print(f"Description: '{description}'")
            doc_usage = re.findall(r'\{\{\s*doc\(["\']([^"\']+)["\']\)\s*\}\}', description)
            print(f"Doc usage found: {doc_usage}")
            if doc_usage:
                # doc() is used: every referenced doc block must exist.
                for doc_name in doc_usage:
                    if doc_name not in doc_names:
                        errors.append(
                            f"Column '{column_name}' in model '{model_name}' references undefined doc '{doc_name}'."
                        )
            else:
                # doc() is not used: look for a doc block named <prefix>__<column_name>.
                # Sorting keeps the suggestion stable when several blocks match.
                matching_docs = sorted(dn for dn in doc_names if dn.endswith(f"__{column_name}"))
                if matching_docs:
                    suggested_doc = matching_docs[0]
                    errors.append(
                        f"Column '{column_name}' in model '{model_name}' should use '{{{{ doc(\"{suggested_doc}\") }}}}' in its description."
                    )
                else:
                    print(f"No matching doc found for column '{column_name}'")


    def main():
        changed_files = get_changed_files()
        yaml_files = [f for f in changed_files if f.endswith('.yml') or f.endswith('.yaml')]
        doc_names = extract_doc_names()
        errors = []
        for yaml_file in yaml_files:
            # Files deleted in the PR still appear in the diff; skip them.
            if not os.path.exists(yaml_file):
                continue
            yaml_content = parse_yaml_file(yaml_file)
            for item in yaml_content:
                if not isinstance(item, dict):
                    continue
                models = item.get('models') or item.get('sources')
                if not models:
                    continue
                for model in models:
                    model_name = model.get('name')
                    columns = model.get('columns') or []
                    validate_columns(columns, doc_names, errors, model_name)
        if errors:
            print("Documentation validation failed with the following errors:")
            for error in errors:
                print(f"- {error}")
            sys.exit(1)
        else:
            print("All documentation validations passed.")


    if __name__ == "__main__":
        main()

Let’s summarise the steps in the script:

- it lists all files that have been changed in the pull request compared to origin/main.

- it looks through all markdown (.md) files within the docs folder (including subdirectories) and it searches for special documentation block patterns using a regex. Each time it finds such a pattern, it extracts the doc_name part and adds it to a set of doc names.

- for each changed .yml file, the script opens and parses it using yaml.safe_load_all. This converts the .yml content into Python dictionaries (or lists) for easy analysis.

- validate_columns: for each column defined in the .yml files, it checks the description field for a {{ doc() }} reference. If references are found, it verifies that each referenced doc name exists in the set of doc names extracted earlier, and reports an error if one does not. If no doc reference is found, it checks whether a doc block matches the column’s name. Note that this relies on a naming convention like doc_block__column_name. If such a block exists, it reports an error suggesting that the column description should reference this doc.

Any problems (missing doc references, non-existent referenced docs) are recorded as errors.
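To see the extraction step in isolation, here is a small sketch that applies the same regular expression as the script to an in-memory string instead of files on disk. The sample_markdown content is made up for illustration and only mirrors the structure of the orders.md example.

```python
import re

# Same pattern as in validate_docs.py: captures the name in {% docs <name> %}.
DOC_BLOCK = re.compile(r'\{%\s*docs\s+([^\s%]+)\s*%}')

# Hypothetical Markdown content, one block written without inner spaces.
sample_markdown = """
## order_id
{% docs orders__order_id %}
Unique alphanumeric identifier of the order
{% enddocs %}

## order_country
{%docs orders__order_country%}
Country where the order was placed.
{% enddocs %}
"""

doc_names = set(DOC_BLOCK.findall(sample_markdown))
print(sorted(doc_names))
```

The closing {% enddocs %} tags are not captured, because the pattern requires the literal word docs right after the opening delimiter and its optional whitespace.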

Examples

Now, let’s have a look at the CI in action. Given the orders.md file shared at the beginning of the article, we push a commit to the remote branch that contains the following ref_country_orders.yml file:

version: 2

models:

- name: ref_country_orders

description: >

This model filters orders from the staging orders table to include only those with an order date on or after January 1, 2020.

It includes information such as the order ID, order country, order value, and order date.

columns:

- name: order_id

description: '{{ doc("orders__order_id") }}'

- name: order_country

description: The country where the order was placed.

- name: order_value

description: The value of the order.

- name: order_address

description: The address where the order was placed.

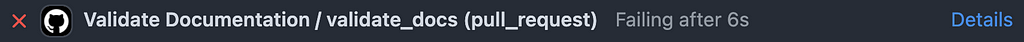

- name: order_date

The CI has failed. Clicking on Details takes us to the CI log, where we see this:

    Validating column 'order_id' in model 'ref_country_orders'
    Description: '{{ doc("orders__order_id") }}'
    Doc usage found: ['orders__order_id']
    Validating column 'order_country' in model 'ref_country_orders'
    Description: 'The country where the order was placed.'
    Doc usage found: []
    Validating column 'order_value' in model 'ref_country_orders'
    Description: 'The value of the order.'
    Doc usage found: []
    Validating column 'order_address' in model 'ref_country_orders'
    Description: 'The address where the order was placed.'
    Doc usage found: []
    No matching doc found for column 'order_address'
    Validating column 'order_date' in model 'ref_country_orders'
    Description: ''
    Doc usage found: []

Let’s analyze the log:

- for the order_id column, it found the doc usage in its description.

- the order_address column isn’t found in the docs files, so the script logs No matching doc found for column 'order_address'.

- for order_value and order_country, doc blocks exist in the docs but no doc usage was found in their descriptions. The same goes for order_date; note that for this one we didn’t even add a description line.

All good so far. But let’s keep looking at the log:

    Documentation validation failed with the following errors:
    - Column 'order_country' in model 'ref_country_orders' should use '{{ doc("orders__order_country") }}' in its description.
    - Column 'order_value' in model 'ref_country_orders' should use '{{ doc("orders__order_value") }}' in its description.
    - Column 'order_date' in model 'ref_country_orders' should use '{{ doc("orders__order_date") }}' in its description.
    Error: Process completed with exit code 1.

Since order_country, order_value, and order_date are in the docs file but the doc function isn’t used for them, the CI raises an error. It also suggests the exact value to add to the description, which makes it easy for the PR author to copy the correct description value from the CI log and paste it into the .yml file.

After pushing the new changes, the CI check was successful and the log now looks like this:

    Validating column 'order_id' in model 'ref_country_orders'
    Description: '{{ doc("orders__order_id") }}'
    Doc usage found: ['orders__order_id']
    Validating column 'order_country' in model 'ref_country_orders'
    Description: '{{ doc("orders__order_country") }}'
    Doc usage found: ['orders__order_country']
    Validating column 'order_value' in model 'ref_country_orders'
    Description: '{{ doc("orders__order_value") }}'
    Doc usage found: ['orders__order_value']
    Validating column 'order_address' in model 'ref_country_orders'
    Description: 'The address where the order was placed.'
    Doc usage found: []
    No matching doc found for column 'order_address'
    Validating column 'order_date' in model 'ref_country_orders'
    Description: '{{ doc("orders__order_date") }}'
    Doc usage found: ['orders__order_date']
    All documentation validations passed.

For the order_address column, the log shows that no matching doc was found. That’s fine and does not cause the CI to fail, since adding that column to the docs file is not our intention for this demonstration. Meanwhile, the rest of the columns are listed in the docs and correctly use the {{ doc() }} function.

In conclusion, by integrating this validation CI into your dbt repository, you can confidently maintain a centralised source of truth for column descriptions across your entire project and make the most of the {{ doc() }} feature in dbt. This setup saves valuable review time, reduces human error, and upholds a higher standard of documentation quality. As your project grows, you’ll find that this automated approach to documentation management is both scalable and maintainable, ultimately enabling your team to focus on analytics rather than debugging inconsistent docs.

Optimize the dbt Doc Function with a CI was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.
