Speed Up PyTorch With Custom Kernels. But It Gets Progressively Darker

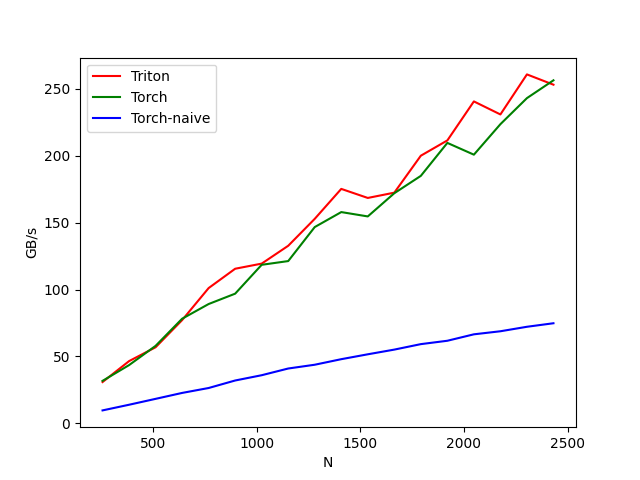

Read for free at alexdremov.me

PyTorch offers remarkable flexibility, allowing you to code complex GPU-accelerated operations in a matter of seconds. However, this convenience comes at a cost. By default, PyTorch executes your code eagerly, one operation at a time, so each operation typically launches its own GPU kernel and writes its intermediate result back to memory. The result is suboptimal performance. This translates into slower model training, which lengthens the iteration cycle of your experiments, slows down your team, increases compute costs, and so on.

In this post, I’ll explore three strategies for accelerating your PyTorch operations. Each method uses softmax as our “Hello World” demonstration, but you can swap it for any function you like, and the methods discussed still apply.
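As a reference point, here is a minimal sketch of softmax written as plain eager PyTorch operations. The function name `naive_softmax` and the tensor shape are illustrative assumptions, not taken from the original code. In eager mode, each line in the function body runs as its own operation with its own intermediate tensor, which is the kind of step-by-step execution the techniques in this post aim to reduce.

```python
import torch


def naive_softmax(x: torch.Tensor, dim: int = -1) -> torch.Tensor:
    """Numerically stable softmax written as separate eager operations."""
    # Subtract the per-row maximum so exp() cannot overflow.
    x_max = x.max(dim=dim, keepdim=True).values
    shifted = x - x_max
    # Exponentiate, then normalize so each row sums to 1.
    numerator = torch.exp(shifted)
    denominator = numerator.sum(dim=dim, keepdim=True)
    return numerator / denominator


# Use the GPU when one is available; the code also runs on the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
x = torch.randn(4, 8, device=device)  # hypothetical example input
out = naive_softmax(x)

# The result should agree with PyTorch's built-in implementation.
matches_builtin = torch.allclose(out, torch.softmax(x, dim=-1), atol=1e-6)
```

Comparing against `torch.softmax` gives a simple correctness check that can be reused for any faster implementation of the same function.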

We’ll begin with torch.compile, move on to writing a custom Triton kernel, and finally dive into designing a CUDA kernel.

So, this post may get complicated, but bear with me.