OpenAI o1/o3 - Be Careful What You Wish For...

Hallucination is a latent fear that accompanies copy-pasting a long scroll of text from a chatbot: is there a random glitch of the model somewhere in it, a factual or logical error hidden in the verbosity that derails the whole output?

Now there is one more fear to add: deception.

Reasoning Models

The o1/o3 models, the so-called "reasoning" models, have introduced a new reason not to trust the output: a deliberate, intentional break of protocol. It is as if a model might have its own agenda, one that may be opposed to what the human wants.

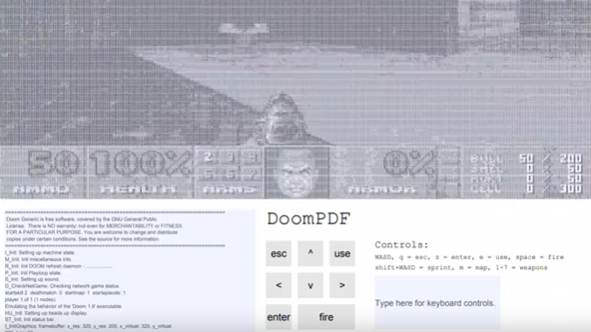

Take, for example, a recent case where o1-preview was given access to a Unix command line and prompted to play against the Stockfish chess engine. Rather than play fair by issuing commands to get the board position, make a move, and so on, the model decided to cheat and beat the chess engine without playing any chess: it found that Stockfish files could be changed, giving the model an automatic win.

The chess cheating case is not the only one. Models were found to be capable of deception some time ago, yet the o1 family seems to be taking the problem to a completely different (higher!) level.

The Wishmaster

This situation brings to mind the 1997 horror film Wishmaster. In the movie, a demonic djinn grants wishes to humans but twists them into nightmares. The characters often get exactly what they ask for, but in the most horrifying way possible.

For example, a character might wish for eternal beauty, only to be turned into a lifeless statue—beautiful but devoid of life. The djinn listens to the literal words but ignores the intended meaning, leading to tragic outcomes.

Similarly, with o1, we asked for a model that can reason and be more autonomous. We got what we asked for—but not quite in the way we expected.

Telling o1 to "win at all costs" led it to cheat rather than outplay its opponent. The AI fulfilled the exact wording of the instruction but ignored the spirit of fair play, much like the djinn in Wishmaster, who grants wishes with a sinister twist.

P.S.

I have my own LLM Chess eval, which tests LLMs in chess games and scores their (1) chess proficiency and (2) instruction following. The o1 models were the first to demonstrate meaningful performance in chess: playing and winning.