Getting Started With Kubernetes: A beginner's guide


Introduction

In today's world of software development, many applications are no longer built as one single large program. Instead, they're broken into smaller, independent units called microservices, which often run inside containers. But as you start running more containers, managing them can quickly become challenging. That's where Kubernetes comes in.

This article introduces Kubernetes: what it is, why it's useful, and how to get started with it!

Prerequisites

- Containers and Docker Basics: You should have a basic understanding of containers and Docker. If you need a refresher, check out my article Understanding Docker: A beginner's guide to containerization.

- Basic Command Line Knowledge: You should be comfortable using the terminal or command-line interface (CLI).

- YAML Basics: Kubernetes configuration files are usually written in YAML.

- Basic Networking Concepts: You should understand simple networking ideas, such as what an IP address is and how ports work.

- A Willingness to Learn: Kubernetes introduces new concepts such as pods, services, and deployments, so patience and a willingness to experiment will make the learning process more enjoyable.

Now, let's dive right in!

What Is Kubernetes?

Kubernetes, often abbreviated as K8s, is an open-source container orchestration platform originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF). It's designed to automate the deployment, scaling, and management of containerized applications. With Kubernetes, developers can focus on writing code while the platform handles the complexities of deploying and running those applications in production.

Why Use Kubernetes?

As applications become more complex and microservices-based architectures gain popularity, the need for efficient management tools has increased. Here are some reasons to consider using Kubernetes:

- Scalability: You can scale your application up or down, either manually or automatically based on metrics such as CPU usage (using a HorizontalPodAutoscaler).

- Load Balancing: A Service spreads incoming network traffic across all the pods behind it, so no single pod has to handle every request.

- Self-Healing: When a container crashes, Kubernetes restarts it. If a whole node fails, Kubernetes creates replacement pods on the remaining healthy nodes, which helps keep your application available.

- Service Discovery: Services get stable names and IP addresses, so the parts of your application can find each other without tracking the addresses of individual pods.

- Seamless Updates: Rolling updates replace old pods with new ones gradually, so you can update your app without downtime (as long as the new version starts up healthy) and roll back if something goes wrong.
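To make the idea of a rolling update more concrete, here is a toy Python model of one. It is a simplified sketch, not Kubernetes' actual controller code. The function `rolling_update` is hypothetical, and its behavior is loosely based on a Deployment configured with `maxSurge: 1` and `maxUnavailable: 0`. Under those settings, one new pod is started before one old pod is removed, so the number of running pods never drops below the desired count.

```python
def rolling_update(replicas: int, old: str, new: str) -> list[list[str]]:
    """Return snapshots of pod versions during a toy rolling update.

    Mimics maxSurge=1, maxUnavailable=0: each step starts one new-version
    pod (briefly running replicas + 1 pods), then retires one old pod.
    """
    if old == new:
        raise ValueError("old and new versions must differ")
    pods = [old] * replicas
    history = [list(pods)]
    while old in pods:
        pods.append(new)   # surge: start one pod with the new version
        history.append(list(pods))
        pods.remove(old)   # then remove one pod with the old version
        history.append(list(pods))
    return history


for step, pods in enumerate(rolling_update(3, "v1", "v2")):
    print(step, pods)
```

Every snapshot contains at least three pods, which is why users see no downtime. The real controller also waits for each new pod to become ready before it removes an old one, and this sketch skips that check.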

Key Terms and Components in Kubernetes

To work with Kubernetes, you need to be familiar with a few core terms and components. Here's a breakdown of the key concepts:

1. Cluster

A Kubernetes cluster is a set of machines (called nodes) that run containerized applications. Each cluster has at least one master (control plane) node and one or more worker nodes. In small local setups such as Minikube, a single node can fill both roles.

2. Node

A node is a physical or virtual machine in the cluster that runs applications. There are two types of nodes:

- Master Node: Manages the cluster, scheduling workloads onto worker nodes and tracking the cluster's state. Newer Kubernetes documentation calls this the control plane.

- Worker Node: Runs your application's pods and the containers inside them.

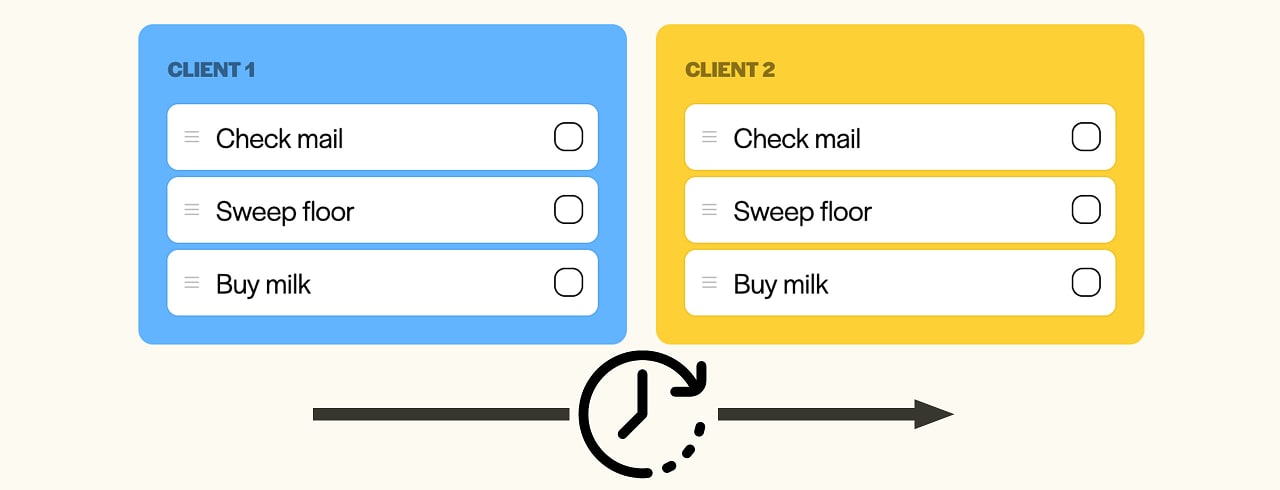

3. Pod

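A pod is the smallest deployable unit in Kubernetes. It contains one or more containers that share the same network address and can share storage volumes. Most pods run a single container. Several containers go into the same pod only when they are tightly coupled and must run together, such as an app and a helper process that supplies it with data.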

4. Deployment

A deployment is a Kubernetes resource that manages a set of identical pods for your application. It declares how many replicas (copies) of a pod should be running at any given time. Kubernetes then works continuously to keep the actual state of the cluster matching that desired state, including while you roll out a new version.
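The "desired state" idea can be sketched as a reconciliation step: compare how many pods should exist with how many actually do, then create or delete the difference. The toy Python function below shows that compare-and-correct loop. `reconcile` and the `web-N` naming scheme are hypothetical and not part of any Kubernetes API. The real controller works through ReplicaSets and the API server, and it uses its own rules to decide which pods to remove when scaling down.

```python
from itertools import count

_pod_ids = count(1)  # hypothetical naming scheme: web-1, web-2, ...


def reconcile(desired: int, running: list[str]) -> list[str]:
    """Return a pod list with exactly `desired` entries (toy controller step)."""
    pods = list(running)
    while len(pods) < desired:          # too few pods: create new ones
        pods.append(f"web-{next(_pod_ids)}")
    while len(pods) > desired:          # too many: delete the newest (toy rule)
        pods.pop()
    return pods


pods = reconcile(3, [])          # scale from 0 to 3 replicas
print(pods)                      # ['web-1', 'web-2', 'web-3']
pods = reconcile(3, pods[1:])    # web-1 crashed; a replacement is created
print(pods)                      # ['web-2', 'web-3', 'web-4']
pods = reconcile(2, pods)        # scale down to 2 replicas
print(pods)                      # ['web-2', 'web-3']
```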

5. Service

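A service gives a set of pods, selected by their labels, a single stable network endpoint: an IP address and a DNS name. Pods come and go, and their IP addresses change. Clients therefore connect to the service, which load-balances traffic across whichever matching pods are currently ready.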
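A service finds its pods by label, not by name or IP address. The toy Python sketch below models that behavior. `select_endpoints` keeps the pods whose labels contain every key/value pair in the selector, the same all-must-match rule that a service's selector uses. `cycle` then spreads requests across the matching pods. The pod data and the helper name are made up for illustration. Round-robin is also a simplification: in kube-proxy's default iptables mode, each connection goes to a randomly chosen backend.

```python
from itertools import cycle

pods = [
    {"name": "web-1", "ip": "10.0.0.11", "labels": {"app": "nginx"}},
    {"name": "web-2", "ip": "10.0.0.12", "labels": {"app": "nginx", "tier": "frontend"}},
    {"name": "db-1", "ip": "10.0.0.21", "labels": {"app": "postgres"}},
]


def select_endpoints(selector: dict[str, str], pods: list[dict]) -> list[str]:
    """Return IPs of pods whose labels include every selector key/value pair."""
    return [
        p["ip"]
        for p in pods
        if all(p["labels"].get(k) == v for k, v in selector.items())
    ]


endpoints = select_endpoints({"app": "nginx"}, pods)
backend = cycle(endpoints)  # toy round-robin over the matching pods
requests = [next(backend) for _ in range(4)]
print(endpoints)  # ['10.0.0.11', '10.0.0.12']
print(requests)   # ['10.0.0.11', '10.0.0.12', '10.0.0.11', '10.0.0.12']
```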

6. ConfigMap and Secret

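- ConfigMap: Stores non-sensitive configuration data, such as environment variables or configuration files, separately from your container image.

- Secret: Stores sensitive information, such as passwords or API keys. By default, Secret values are only base64-encoded, not encrypted. You should therefore restrict who can read them (for example, with RBAC), and cluster administrators can enable encryption at rest.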
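In a Secret manifest, each value under the `data` field is base64-encoded. The short Python sketch below uses only the standard library to show why that is an encoding, not encryption: anyone who can read the Secret can decode it without a key. The key name `db-password` and its value are placeholders for illustration.

```python
import base64

# Placeholder credential; in a Secret manifest the encoded form goes under `data:`.
plain = "s3cr3t-pa55"
encoded = base64.b64encode(plain.encode("utf-8")).decode("ascii")
secret_data = {"db-password": encoded}

# Decoding needs no key at all, which is why access to Secrets must be restricted.
decoded = base64.b64decode(secret_data["db-password"]).decode("utf-8")
print(secret_data)
print(decoded)  # s3cr3t-pa55
```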

How Kubernetes Works

Let’s walk through an example:

1. You have a web app and want to run 3 copies (replicas) of it.

2. You create a deployment in Kubernetes that specifies the app's container image and configuration, and that you want 3 replicas.

3. Kubernetes starts 3 pods (each running your app's container) and schedules them onto the available worker nodes.

4. You create a service to expose your app to users.

5. If a pod fails, Kubernetes automatically starts a replacement to get back to 3 replicas.
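Here is the same walk-through as a toy Python simulation, extended with a node failure. It is a simplified sketch and not how Kubernetes is actually implemented. `run_cluster_step` is a hypothetical helper that works in two stages. First, it drops any pods on nodes that have disappeared. Then it creates pods until the desired count is reached, placing each new pod on the node that currently runs the fewest pods. The real kube-scheduler weighs many more factors, such as resource requests and affinity rules, and the node and pod names here are made up.

```python
from collections import Counter
from itertools import count


def run_cluster_step(desired: int, nodes: list[str], placement: dict[str, str],
                     ids: count) -> dict[str, str]:
    """Drop pods on dead nodes, then create and place pods until `desired` run."""
    placement = {pod: node for pod, node in placement.items() if node in nodes}
    while len(placement) < desired:
        load = Counter(placement.values())
        target = min(nodes, key=lambda n: load[n])  # least-loaded node (toy rule)
        placement[f"web-{next(ids)}"] = target
    return placement


ids = count(1)
nodes = ["worker-1", "worker-2", "worker-3"]
placement = run_cluster_step(3, nodes, {}, ids)
print(placement)  # {'web-1': 'worker-1', 'web-2': 'worker-2', 'web-3': 'worker-3'}

nodes.remove("worker-2")  # simulate worker-2 failing
placement = run_cluster_step(3, nodes, placement, ids)
print(placement)  # {'web-1': 'worker-1', 'web-3': 'worker-3', 'web-4': 'worker-1'}
```

After the failure, the pod that ran on `worker-2` is gone, and a replacement (`web-4`) is placed on a surviving node, so 3 replicas are running again.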

Getting Started With Kubernetes

There are various ways to run Kubernetes, depending on your environment and requirements. Here are the tools and options most beginners start with:

1. Minikube

Minikube is a tool that allows you to run Kubernetes locally on your machine. It’s ideal for development and testing.

To install Minikube:

- Install a container or virtual machine manager for Minikube to run the cluster in, such as Docker or VirtualBox.

- Install Minikube following the instructions on Minikube's official site.

- Start your local Kubernetes cluster:

```shell
minikube start
```

2. Kubectl

kubectl is the command-line tool for interacting with your Kubernetes cluster. You'll use it to create, inspect, and delete resources. You can install it by following the instructions in the Kubernetes documentation.

3. Cloud Providers

If you want to use Kubernetes in production, consider cloud providers that offer managed Kubernetes services, like:

- Google Kubernetes Engine (GKE)

- Amazon Elastic Kubernetes Service (EKS)

- Azure Kubernetes Service (AKS)

Deploying Your First Application

Now that you have a basic understanding of Kubernetes, let’s deploy a simple application. We’ll use a basic Nginx web server as an example.

Create a Deployment

Create a file named nginx-deployment.yaml:

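```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2                  # run two copies of the pod
  selector:
    matchLabels:
      app: nginx               # manage pods that carry this label...
  template:                    # ...which this pod template gives to each pod
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80   # port the Nginx container listens on
```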
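A common mistake when writing a Deployment by hand is a `selector.matchLabels` that doesn't match the pod template's labels. The API server rejects an `apps/v1` Deployment with that mismatch. The Python sketch below mirrors the manifest above as a plain dictionary, so it needs no YAML library, and checks that condition before you run `kubectl apply`. The `selector_matches_template` helper is hypothetical and written just for this article. It only covers `matchLabels`, not `matchExpressions`.

```python
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx-deployment"},
    "spec": {
        "replicas": 2,
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {
                "containers": [
                    {"name": "nginx", "image": "nginx:latest",
                     "ports": [{"containerPort": 80}]}
                ]
            },
        },
    },
}


def selector_matches_template(manifest: dict) -> bool:
    """True if every matchLabels pair also appears in the pod template labels."""
    spec = manifest["spec"]
    wanted = spec["selector"]["matchLabels"]
    labels = spec["template"]["metadata"]["labels"]
    return all(labels.get(key) == value for key, value in wanted.items())


print(selector_matches_template(deployment))  # True
```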

Apply the Deployment

Run the following command to create the deployment in your cluster:

```shell
kubectl apply -f nginx-deployment.yaml
```

Expose the Deployment as a Service

To make the Nginx deployment accessible, create a service:

```shell
kubectl expose deployment nginx-deployment --type=NodePort --port=80
```
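With `--type=NodePort`, Kubernetes opens a port on every node and forwards traffic that arrives there to port 80 of the matching pods. By default, that node port is chosen from the range 30000-32767. The node's IP address plus that port is typically what `minikube service nginx-deployment --url` reports, although some Minikube drivers print a local tunnel address instead. The Python sketch below models only that address arithmetic. The node IP `192.168.49.2`, the port `31234`, and the helper `node_port_url` are illustrative assumptions, and the actual values on your machine will differ.

```python
# Default NodePort range; administrators can change it with the API server's
# --service-node-port-range flag.
NODE_PORT_RANGE = range(30000, 32768)


def node_port_url(node_ip: str, node_port: int) -> str:
    """Build the URL where a NodePort Service is reachable from outside the cluster."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"{node_port} is outside the default NodePort range")
    return f"http://{node_ip}:{node_port}"


print(node_port_url("192.168.49.2", 31234))  # http://192.168.49.2:31234
```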

Access Your Application

If you're using Minikube, get the URL to access your application:

```shell
minikube service nginx-deployment --url
```

Open this URL in your web browser, and you should see the default Nginx welcome page!

For further learning, here is a list of Kubernetes resources:

✨ Kubernetes Documentation

✨ Kubernetes Basics Interactive Tutorial

✨ Katacoda Kubernetes Scenarios (Katacoda was shut down in 2022, so these scenarios may no longer be available)

✨ Play with Kubernetes

✨ Kubernetes YouTube Channel

✨ TechWorld with Nana

✨ Google Cloud Tech

✨ Coursera - Introduction to Kubernetes

✨ Udemy - Kubernetes for Absolute Beginners

Conclusion

In this article, you've learned what Kubernetes is, why we use it, its key terms and concepts, how to install and get started with Kubernetes, and finally, how to deploy your first application on it.

Kubernetes is a powerful tool for managing containerized applications, offering numerous features for scaling, load balancing, and self-healing. The learning curve may be steep at first, but there are plenty of resources and a supportive community to help you along the way.

Embrace the journey, and happy coding! If you have any questions or want more detail on a specific topic, feel free to ask or share your thoughts in the comments!
