Deploying Machine Learning Models: Best Practices and Future Trends

As of January 13, 2025, the deployment of machine learning (ML) models has become a pivotal aspect of the AI lifecycle, transforming theoretical models into practical applications that generate real-world value.

Understanding Machine Learning Model Deployment

Machine Learning Model Deployment refers to the process of integrating a trained ML model into a production environment where it can take in new data and provide predictions or insights. This phase is crucial because it allows organizations to leverage their data-driven models to make actionable business decisions.

Why is Deployment Important?

- Real-Time Predictions: Deployment enables models to make predictions in real time, which is essential for applications like fraud detection, recommendation systems, and autonomous vehicles.

- Operational Efficiency: Properly deployed models can automate processes, reducing manual effort and increasing efficiency.

- Business Value: The ultimate goal of developing ML models is to create business value. Deployment is the step that connects model development with tangible outcomes.

Steps Involved in Deploying Machine Learning Models

Deploying an ML model involves several critical steps that ensure the model functions effectively in a production environment. Below are the key stages involved:

1. Model Development and Training

Before deployment, you need to build and train your ML model. This involves several sub-steps:

- Data Collection: Gather relevant data that will be used for training the model.

- Preprocessing: Clean and preprocess the data to ensure it is suitable for training. This may include handling missing values, normalizing data, and feature engineering.

- Model Selection: Choose an algorithm suited to the type of problem at hand (e.g., regression or classification).

- Training: Train the model using labeled data and evaluate its performance using metrics like accuracy, precision, recall, or F1-score.
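The sub-steps above can be sketched with scikit-learn. This is a minimal illustration rather than a prescribed workflow: it assumes a binary classification task, uses the library's built-in breast cancer dataset as a stand-in for your own collected data, and picks `LogisticRegression` purely as an example model.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Data collection: a built-in labeled dataset stands in for your own data
X, y = load_breast_cancer(return_X_y=True)

# Hold out 20% of the samples so the metrics reflect data the model has not seen
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Preprocessing + model selection: scaling and the classifier travel together,
# so the same preprocessing is applied automatically at inference time
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Training
model.fit(X_train, y_train)

# Evaluation on the held-out set
y_pred = model.predict(X_test)
metrics = {
    "accuracy": accuracy_score(y_test, y_pred),
    "precision": precision_score(y_test, y_pred),
    "recall": recall_score(y_test, y_pred),
    "f1": f1_score(y_test, y_pred),
}
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Bundling the scaler and the classifier into one pipeline means a single object is later serialized and deployed, which helps keep training-time and serving-time preprocessing identical.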

2. Model Evaluation

Once the model is trained, it must be evaluated to ensure it meets performance standards before deployment.

- Cross-Validation: Use techniques like k-fold cross-validation to assess how well the model generalizes to unseen data.

- Hyperparameter Tuning: Optimize hyperparameters using methods such as grid search or random search to improve model performance.
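Both techniques can be sketched with scikit-learn, again assuming the illustrative dataset and model from the training step (placeholders, not requirements). Keeping the scaler inside a `Pipeline` means it is re-fitted on each training fold, so no statistics leak in from the validation fold.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

pipeline = Pipeline([
    ("scaler", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# 5-fold cross-validation: train on 4 folds, score on the held-out fold, repeat 5 times
scores = cross_val_score(pipeline, X, y, cv=5, scoring="f1")
print(f"F1 per fold: {scores.round(3)}, mean: {scores.mean():.3f}")

# Grid search: evaluate every candidate value with 5-fold CV and keep the best mean score
param_grid = {"clf__C": [0.01, 0.1, 1.0, 10.0]}
search = GridSearchCV(pipeline, param_grid, cv=5, scoring="f1")
search.fit(X, y)
print("Best params:", search.best_params_)
print(f"Best CV F1: {search.best_score_:.3f}")
```

`RandomizedSearchCV` provides the random-search alternative with a similar interface. Because `best_score_` is measured on the same data used to choose the hyperparameters, it tends to be optimistic; a separate held-out test set gives a fairer final estimate.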

3. Model Serialization

After validating the model's performance, serialize it for deployment. Serialization involves saving the trained model in a format that can be loaded later for inference.

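- Common Formats: Popular serialization formats include Pickle (Python's built-in object serialization), Joblib (widely used for scikit-learn models), and ONNX (Open Neural Network Exchange) for interoperability between frameworks. Loading a Pickle or Joblib file can execute arbitrary code, so only load model files from trusted sources.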
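A minimal Joblib round trip, assuming a scikit-learn model (the iris dataset and `LogisticRegression` here are placeholders). The filename `model.pkl` matches the one loaded by the API example in step 7.

```python
import joblib
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000).fit(X, y)

# Save the fitted model to disk
joblib.dump(model, "model.pkl")

# Later, in the serving process: load it back and use it for inference
loaded = joblib.load("model.pkl")
print("Predictions match:", (loaded.predict(X) == model.predict(X)).all())
```

scikit-learn does not guarantee that a model saved with one library version will load correctly under another, so it is safest to pin the same versions in the serving environment as in training.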

4. Choosing a Deployment Environment

Select an appropriate environment for deploying your model based on your application needs:

- Cloud Services: Platforms like AWS, Google Cloud Platform (GCP), and Microsoft Azure offer scalable infrastructure for deploying ML models.

- On-Premises Servers: For organizations with strict data privacy requirements, deploying on local servers may be preferred.

- Edge Devices: In scenarios requiring low latency (e.g., IoT devices), deploying models on edge devices can facilitate real-time predictions.

5. Containerization

Containerization simplifies deployment by packaging your model along with its dependencies into a container.

- Docker: Use Docker to create a container image that includes the model files and necessary libraries. This ensures consistency across different environments.

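```dockerfile
# Example Dockerfile
FROM python:3.12-slim

WORKDIR /app

# Install dependencies first so Docker can cache this layer
# (requirements.txt is assumed to list fastapi, uvicorn, joblib, and scikit-learn)
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy the application code and the serialized model
COPY . .

EXPOSE 8000

# Serve the FastAPI object `app`, assumed to be defined in app.py, with uvicorn
CMD ["uvicorn", "app:app", "--host", "0.0.0.0", "--port", "8000"]
```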

6. Deploying the Containerized Model

Once your model is containerized, deploy it using container orchestration tools for scalability and management.

- Kubernetes: Kubernetes can manage deployments at scale by handling load balancing and scaling based on demand.

Steps for Deployment:

- Push your Docker image to a container registry (e.g., Docker Hub).

- Create a Kubernetes deployment configuration file specifying replicas and resource limits.

- Use `kubectl` commands to deploy your application.
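Kubernetes manifests are usually written in YAML, but `kubectl apply -f` also accepts JSON. To stay in Python, this sketch builds an `apps/v1` Deployment as a plain dictionary with a replica count and resource limits. The name `ml-model`, the image reference, container port 8000, and the CPU and memory values are hypothetical placeholders.

```python
import json


def build_deployment(name, image, replicas=3, cpu="500m", memory="512Mi", port=8000):
    """Return a Kubernetes apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    resources = {"cpu": cpu, "memory": memory}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Requests drive scheduling; limits cap what the container may use
                            "resources": {"requests": dict(resources), "limits": dict(resources)},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference; use the one you pushed to your registry
    manifest = build_deployment("ml-model", "docker.io/your-org/ml-model:1.0")
    print(json.dumps(manifest, indent=2))
```

Saving the printed output as `deployment.json` and running `kubectl apply -f deployment.json` would create the Deployment. Setting requests equal to limits, as here, places the pods in the Guaranteed quality-of-service class; lower requests let the scheduler pack pods more densely at the cost of that guarantee.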

7. Creating an API Endpoint

To allow external applications to interact with your deployed model, create an API endpoint.

- Flask/FastAPI: Use frameworks like Flask or FastAPI in Python to create RESTful APIs that serve predictions from your model.

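```python
import joblib
from fastapi import FastAPI
from pydantic import BaseModel

app = FastAPI()

# Load the trained model once, when the application starts
model = joblib.load("model.pkl")


class PredictRequest(BaseModel):
    # Feature vector for a single sample, e.g. {"features": [5.1, 3.5, 1.4, 0.2]}
    features: list[float]


@app.post("/predict")
def predict(request: PredictRequest):
    # scikit-learn models expect a 2-D input (a list of samples)
    prediction = model.predict([request.features])
    return {"prediction": prediction.tolist()}
```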
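On the client side, an application calls the endpoint by POSTing JSON with a `features` list. This sketch uses only the Python standard library; the URL `http://localhost:8000/predict` is an assumption about where the API is running.

```python
import json
import urllib.request

PREDICT_URL = "http://localhost:8000/predict"  # assumed host and port


def build_predict_request(features, url=PREDICT_URL):
    """Build a POST request whose JSON body has the {"features": [...]} shape the endpoint reads."""
    body = json.dumps({"features": features}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def predict_remote(features, url=PREDICT_URL, timeout=5.0):
    # Sends the request; this only succeeds if the API server is reachable at `url`
    with urllib.request.urlopen(build_predict_request(features, url), timeout=timeout) as resp:
        return json.loads(resp.read())["prediction"]
```

Separating request construction from sending makes the payload format easy to check in a unit test without a running server.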

8. Testing and Validation

Before fully launching your deployed model, conduct thorough testing:

- Unit Testing: Test individual components of your application (e.g., API endpoints) to ensure they work as expected.

- Integration Testing: Validate that different parts of your system work together seamlessly.

- Load Testing: Simulate high traffic conditions to assess how well your system handles multiple requests.
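Dedicated tools such as Locust or JMeter are the usual choice for load testing, but the core idea can be sketched in standard-library Python: issue many calls concurrently and report latency percentiles rather than only the average. `fake_predict` is a hypothetical stand-in; in practice `call` would send a real request to the deployed endpoint.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def load_test(call, n_requests=200, concurrency=20):
    """Run `call` n_requests times across `concurrency` threads and summarize latency."""

    def timed_call(_):
        start = time.perf_counter()
        call()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, range(n_requests)))

    # quantiles(..., n=100) returns the 99 cut points for percentiles 1..99
    cuts = statistics.quantiles(latencies, n=100)
    return {
        "requests": len(latencies),
        "p50_ms": cuts[49] * 1000,
        "p95_ms": cuts[94] * 1000,
        "max_ms": latencies[-1] * 1000,
    }


def fake_predict():
    # Hypothetical stand-in for an HTTP call to the /predict endpoint
    time.sleep(0.005)


if __name__ == "__main__":
    print(load_test(fake_predict, n_requests=100, concurrency=10))
```

Threads suit this case because HTTP calls spend most of their time waiting on I/O; tail percentiles such as p95 usually reveal saturation earlier than the mean does.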

9. Monitoring and Maintenance

Once deployed, continuous monitoring is essential to ensure optimal performance:

- Performance Metrics: Track key metrics such as response time, throughput, error rates, and resource utilization.

- Model Drift Monitoring: Monitor for changes over time that can degrade model performance, such as shifts in the input data distribution (data drift) or in the relationship between inputs and the target (concept drift).

- Logging: Implement logging mechanisms to capture errors and track usage patterns for further analysis.
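One common way to quantify drift in an input feature is the Population Stability Index (PSI), which compares a feature's distribution in production with its distribution in the training data. This is a simplified sketch: it uses equal-width bins over the training range (quantile bins are also common), and the `eps` smoothing value is an arbitrary choice.

```python
import math
import random


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the
    fractions of the reference (training) and production samples in bin i."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # avoid zero-width bins for a constant feature

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            # Clamp so values outside the training range fall into the edge bins
            idx = min(max(int((v - lo) / width), 0), n_bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    random.seed(0)
    training = [random.gauss(0, 1) for _ in range(5000)]
    same = [random.gauss(0, 1) for _ in range(5000)]
    shifted = [random.gauss(1, 1) for _ in range(5000)]  # mean moved by one std dev
    print(f"PSI, same distribution: {population_stability_index(training, same):.3f}")
    print(f"PSI, shifted mean:      {population_stability_index(training, shifted):.3f}")
```

A frequently quoted rule of thumb treats PSI below 0.1 as a negligible shift, 0.1 to 0.25 as moderate, and above 0.25 as significant, though thresholds should be tuned to your own data. PSI on inputs detects data drift only; detecting concept drift generally requires comparing predictions with ground-truth labels as they become available.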

Tools for Monitoring:

- Prometheus/Grafana: Use Prometheus for monitoring metrics and Grafana for visualizing those metrics on dashboards.

- ELK Stack (Elasticsearch, Logstash, Kibana): For logging and analyzing application logs.
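Log analysis in the ELK Stack is simpler when the application emits structured logs. As one possible approach, this sketch uses Python's standard `logging` module to write one JSON object per line, which Logstash's `json` codec or filter can parse directly. The logger name `model-api`, the `fields` convention for extra data, and the logged values are assumptions made for this example.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Format each log record as a single line of JSON."""

    def format(self, record):
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured fields passed as logger.info(..., extra={"fields": {...}})
        payload.update(getattr(record, "fields", {}))
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def get_json_logger(name="model-api"):
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()  # writes to stderr by default
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


if __name__ == "__main__":
    log = get_json_logger()
    log.info("prediction served", extra={"fields": {"model_version": "1.0", "latency_ms": 12.5}})
    try:
        raise ValueError("expected 4 features, got 3")
    except ValueError:
        log.exception("prediction failed")  # logs at ERROR level with the traceback
```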

Challenges in Machine Learning Model Deployment

While deploying ML models offers numerous benefits, several challenges must be addressed:

- Scalability Issues: Ensuring that your deployment can handle varying loads without degradation in performance requires careful planning and resource allocation.

- Data Privacy Concerns: Handling sensitive data necessitates compliance with regulations such as GDPR or HIPAA.

- Model Management Complexity: As more models are deployed, managing versions and ensuring consistency across deployments can become complex.

- Integration with Existing Systems: Seamlessly integrating ML models into existing workflows or applications may require additional development efforts.

Best Practices for Successful Deployment

To maximize the success of your ML deployment efforts:

- Automate CI/CD Pipelines: Implement continuous integration/continuous deployment (CI/CD) pipelines for automating testing and deployment processes.

- Version Control Models: Use Git for code together with a tool such as DVC (Data Version Control) to track versions of models and datasets, which are usually too large to store in Git directly.

- Conduct Regular Audits: Periodically review deployed models for performance issues or biases that may have emerged since deployment.

- Engage Stakeholders Early On: Collaborate with stakeholders throughout the development lifecycle to ensure alignment with business goals.
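An automated CI/CD pipeline needs an objective rule for when a model may be promoted. One possible sketch is a quality gate that a CI job runs after evaluation; the metric names and thresholds are hypothetical and would come from your own evaluation step.

```python
def failed_checks(metrics, thresholds):
    """Return a message for every metric that is missing or below its required minimum."""
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif value < minimum:
            failures.append(f"{name}: {value:.3f} < {minimum:.3f}")
    return failures


if __name__ == "__main__":
    # Hypothetical numbers; a real pipeline would load them from the evaluation step's output
    candidate = {"accuracy": 0.94, "f1": 0.91}
    required = {"accuracy": 0.90, "f1": 0.92}
    for problem in failed_checks(candidate, required):
        print("quality gate failed:", problem)
```

A CI step could end with `sys.exit(1 if failed_checks(metrics, thresholds) else 0)`, since a non-zero exit status makes most CI systems mark the step as failed and stop the deployment.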

Future Trends in Machine Learning Model Deployment

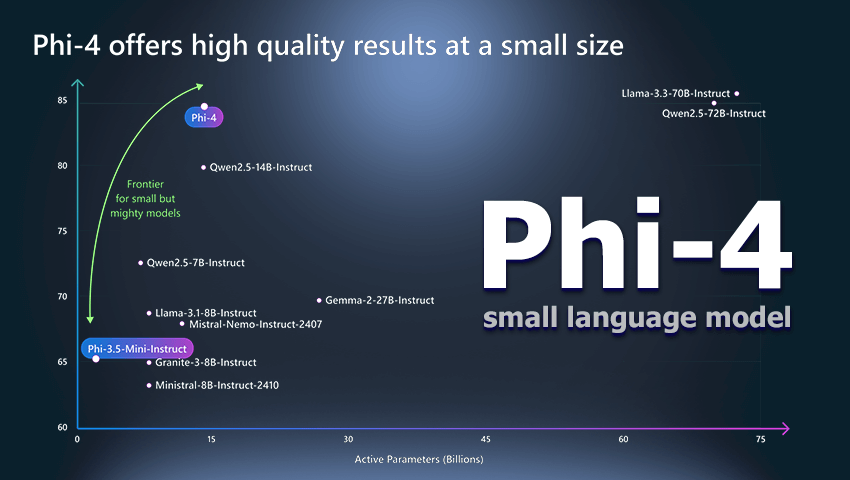

As we move forward into an increasingly AI-driven world, several trends are likely to shape the future of ML model deployment:

- Increased Use of MLOps Practices: MLOps combines machine learning with DevOps practices to streamline workflows from development through deployment while ensuring collaboration between teams.

- Federated Learning Approaches: Federated learning allows models to be trained across decentralized devices while keeping data localized—enhancing privacy while still benefiting from collective learning.

- Explainable AI (XAI): As transparency becomes more critical in AI applications, explainable AI techniques will help demystify how models make decisions—building trust among users.

- Serverless Architectures: Serverless computing will gain traction as organizations seek cost-effective ways to deploy scalable applications without managing server infrastructure directly.
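The aggregation step of Federated Averaging (FedAvg), one widely used federated learning algorithm, is simple to sketch: each client trains locally and sends only its model parameters, and the server averages them weighted by each client's number of training examples. Representing a model as a flat list of floats is a simplification for illustration, and the client values below are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg aggregation: weight each client's parameters by its share of the training examples."""
    total = sum(client_sizes)
    averaged = [0.0] * len(client_weights[0])
    for weights, size in zip(client_weights, client_sizes):
        for i, w in enumerate(weights):
            averaged[i] += w * size / total
    return averaged


if __name__ == "__main__":
    # Three hypothetical clients with two-parameter models and different amounts of local data
    clients = [[0.2, 1.0], [0.4, 0.8], [0.1, 1.2]]
    sizes = [100, 300, 50]
    print(federated_average(clients, sizes))
```

Only the parameter vectors cross the network; the raw training data never leaves each client.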

Conclusion

Deploying machine learning models is a multifaceted process that requires careful planning, execution, and ongoing management to realize their full potential in real-world applications. By following best practices at every stage, from development through monitoring, organizations can harness the power of ML while managing its complexities responsibly.

As AI-powered solutions spread across sectors such as healthcare, finance, and transportation, robust deployment strategies become ever more important: they are the bridge between advances in machine learning research and practical applications that drive meaningful change across industries.

Written by Hexadecimal Software and Hexahome